Mastering Text Training for Large Language Models (LLMs)

Nov 23, 2023

In the dynamic world of artificial intelligence, Large Language Models (LLMs) stand at the forefront, heralding a new era of innovation and possibilities. These models, renowned for their proficiency in understanding, generating, and interpreting human language, have redefined our interaction with technology. From automated customer service to sophisticated data analysis, LLMs are reshaping the landscape of AI applications.

As we delve into the complexities of training these text-based LLMs, we aim to equip AI researchers, team leaders, and enthusiasts with insights and practical advice. It’s a journey through the labyrinth of AI, where each turn brings new challenges and opportunities.

LLM Model Architectures

Choosing the right model architecture is a foundational step in training an LLM. This decision impacts everything from performance to scalability. The Transformer architecture and two of its best-known descendants, GPT and BERT, each bring unique strengths to the table.

The Transformer architecture, introduced in the 2017 paper “Attention Is All You Need,” has become a staple in natural language processing. Its core is the self-attention mechanism, which lets the model weigh how relevant each part of the input is to every other part, giving it a much stronger grasp of context.
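
The central operation in that paper is scaled dot-product attention, softmax(QKᵀ/√d_k)V. The sketch below is a minimal single-head version in pure Python, assuming toy hand-written matrices; it omits the learned projections, masking, and multiple heads of a real Transformer layer, and the helper names are illustrative.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain matrix product: (n x k) @ (k x m) -> (n x m).
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the row maximum first so exp() cannot overflow.
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V, with no masking."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # one row of similarity scores per query
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)  # each output row is a weighted average of value rows


# Toy example: one query that matches the first key far better than the second.
q = [[10.0, 0.0]]
k = [[10.0, 0.0], [0.0, 10.0]]
v = [[1.0, 0.0], [0.0, 1.0]]
print(scaled_dot_product_attention(q, k, v))  # very close to [[1.0, 0.0]]
```

Because the query aligns with the first key, almost all of the attention weight lands on the first value row; with identical keys the weights would be uniform and the output would be the plain average of the values.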

GPT (Generative Pre-trained Transformer) models, epitomized by OpenAI’s 175-billion-parameter GPT-3, are a massive scale-up of a decoder-only Transformer trained to predict the next token in a sequence. Trained on vast amounts of text, they generate output that is coherent and contextually relevant. This versatility makes them suitable for a range of tasks, from content creation to conversation, and even coding.
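
The next-token objective means the training target at each position is simply the token that follows it. A minimal sketch of turning a tokenized corpus into (input, target) windows, assuming integer token IDs from some tokenizer (not shown) and non-overlapping windows, which is a simplifying choice rather than how any particular GPT model was trained:

```python
from typing import List, Tuple


def next_token_windows(token_ids: List[int], context_len: int) -> List[Tuple[List[int], List[int]]]:
    """Cut a token stream into (input, target) pairs for next-token prediction.

    The target is the input shifted left by one position; with a causal mask
    in the model (not shown), position i learns to predict token i + 1 from
    the tokens up to and including i.
    """
    pairs = []
    # Non-overlapping windows; each needs context_len + 1 tokens to form a pair.
    for start in range(0, len(token_ids) - context_len, context_len):
        window = token_ids[start : start + context_len + 1]
        pairs.append((window[:-1], window[1:]))
    return pairs


print(next_token_windows([10, 11, 12, 13, 14, 15, 16], context_len=3))
# [([10, 11, 12], [11, 12, 13]), ([13, 14, 15], [14, 15, 16])]
```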

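On the other hand, Google’s BERT (Bidirectional Encoder Representations from Transformers) introduced a different approach to language modeling. It uses only the Transformer’s encoder and is pre-trained with masked language modeling: some input words are hidden, and the model learns to recover them. As detailed in its foundational paper, this bidirectional training allows BERT to understand the context of a word by considering both the words that precede it and the words that follow it.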
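
The BERT paper describes the masking recipe: select about 15% of token positions; of those, replace 80% with a [MASK] token, 10% with a random token, and leave 10% unchanged. A minimal sketch of that procedure, assuming integer token IDs and a hypothetical `mask_id` reserved for [MASK]; unlike a real pipeline, it does not exclude special tokens such as [CLS] or [SEP] from masking.

```python
import random
from typing import List, Optional, Tuple


def mask_tokens(token_ids: List[int], mask_id: int, vocab_size: int,
                rng: random.Random, mask_prob: float = 0.15) -> Tuple[List[int], List[Optional[int]]]:
    """Return (model inputs, labels); labels are None where nothing is predicted."""
    inputs = list(token_ids)
    labels: List[Optional[int]] = [None] * len(token_ids)
    for i, tok in enumerate(token_ids):
        if rng.random() >= mask_prob:
            continue  # not selected: no prediction target at this position
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = mask_id  # 80% of selected positions: [MASK]
        elif r < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


rng = random.Random(0)
inputs, labels = mask_tokens([7, 42, 9, 13, 88, 5], mask_id=1000, vocab_size=1000, rng=rng)
print(inputs, labels)
```

Keeping some selected tokens unchanged or randomized means the model cannot assume that only [MASK] positions matter, which narrows the gap between pre-training inputs and real text.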

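Each of these architectures suits different tasks and requirements. Encoder models like BERT are a natural fit for understanding tasks such as classification and entity extraction, while decoder models like GPT excel at open-ended generation. Beyond that, the right choice depends on factors like the complexity of the language, the size of the training dataset, and the specific application for the model.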

Pre-Training Challenges and Solutions

Pre-training an LLM is a resource-intensive endeavor that poses several challenges. Key among these are selecting the right training data and managing computational requirements.

Effective pre-training is essential for establishing the fundamental language skills of LLMs. It involves training on large-scale corpora to equip the models with necessary language generation and understanding capabilities. However, this process is not without its hurdles.

Data Selection: The quality and diversity of training data are pivotal in shaping an LLM’s performance. The data must be representative of the contexts the model will encounter. This means meticulously curating datasets that are comprehensive, diverse, and unbiased. For more on the importance of dataset diversity, this study explores how different data types can impact an LLM’s training.
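
In practice, curation starts with mechanical filtering. The sketch below shows two common first passes, heuristic quality filters and exact deduplication by hashing normalized text. The thresholds (`min_words`, `max_symbol_ratio`) are arbitrary assumptions for illustration; production pipelines typically add near-duplicate detection (for example MinHash) and learned quality classifiers, which are not shown.

```python
import hashlib
import re
from typing import Iterable, List


def normalize(text: str) -> str:
    # Lowercase and collapse whitespace so trivially different copies hash identically.
    return re.sub(r"\s+", " ", text.strip().lower())


def select_documents(docs: Iterable[str], min_words: int = 5,
                     max_symbol_ratio: float = 0.3) -> List[str]:
    """Keep documents that pass simple quality heuristics, dropping exact duplicates."""
    seen_hashes = set()
    kept = []
    for doc in docs:
        norm = normalize(doc)
        if not norm or len(norm.split()) < min_words:
            continue  # empty or too short to carry useful context
        symbols = sum(1 for ch in norm if not (ch.isalnum() or ch.isspace()))
        if symbols / len(norm) > max_symbol_ratio:
            continue  # mostly punctuation or markup: likely debris, not prose
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue  # exact duplicate after normalization
        seen_hashes.add(digest)
        kept.append(doc)
    return kept


docs = [
    "The patient was prescribed 20 mg daily.",
    "the  patient was prescribed 20 mg   daily.",
    "Too short",
    "<<<>>> {{{ }}} ### @@@ !!!",
    "A second, distinct sentence about clinical trials.",
]
print(select_documents(docs))
# ['The patient was prescribed 20 mg daily.', 'A second, distinct sentence about clinical trials.']
```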

Computational Requirements: The computational power needed to train LLMs is substantial. It typically calls for clusters of specialized accelerators such as GPUs or TPUs, and training runs are both costly and time-consuming. To balance model size, data volume, and computational resources effectively, it pays to understand the different approaches to model optimization.
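
A back-of-the-envelope estimate makes this trade-off concrete. A widely used rule of thumb from the scaling-laws literature puts training compute at roughly 6 × N × D FLOPs for N parameters and D training tokens, and the compute-optimal “Chinchilla” analysis suggests on the order of 20 tokens per parameter. The sketch below applies both; the 1B-parameter model, the 312 TFLOPS per-GPU peak, the 40% utilization, and the 8-GPU cluster are all assumed figures, not measurements.

```python
SECONDS_PER_DAY = 86_400


def training_flops(n_params: float, n_tokens: float) -> float:
    # Rule of thumb: about 6 FLOPs per parameter per training token
    # (roughly 2 for the forward pass and 4 for the backward pass).
    return 6.0 * n_params * n_tokens


def wall_clock_days(total_flops: float, peak_flops_per_gpu: float,
                    utilization: float, n_gpus: int) -> float:
    # Real runs sustain only a fraction (utilization) of the hardware's peak.
    sustained = peak_flops_per_gpu * utilization * n_gpus
    return total_flops / sustained / SECONDS_PER_DAY


n_params = 1e9            # hypothetical 1B-parameter model
n_tokens = 20 * n_params  # ~20 tokens per parameter (compute-optimal heuristic)
flops = training_flops(n_params, n_tokens)
days = wall_clock_days(flops, peak_flops_per_gpu=312e12, utilization=0.4, n_gpus=8)
print(f"{flops:.2e} FLOPs, about {days:.1f} days on 8 GPUs")
# 1.20e+20 FLOPs, about 1.4 days on 8 GPUs
```

Because compute grows with the product of N and D, doubling both the model and the data quadruples the cost, which is why choosing the model size and the dataset size together matters.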

For those seeking a deeper understanding of pre-training challenges and solutions, exploring resources like DeepMind’s blog on language modeling can provide valuable insights.

The Benefits of Custom LLMs

Custom LLMs can provide more accurate and relevant results for specific applications. For instance, a model trained on medical literature and patient histories would be more effective in a healthcare application. The key to building such tailored models lies in creating effective training data.

For insights into customizing LLMs for specific needs, this resource offers an overview of the C4 (Colossal Clean Crawled Corpus) dataset, which is instrumental in training versatile models.

Creating Effective Training Data

Training data is the lifeblood of your LLM. It’s what teaches your model about the world it’ll be operating in. The key here is quality and diversity. You want data that’s clean, varied, and as unbiased as possible.

Collecting data from a range of sources is crucial. In our medical example, this might include medical journals, patient forums, and clinical trial reports. Cleaning this data helps your LLM learn more efficiently. Ethical data sourcing and respect for privacy laws are not just good practice; they’re essential. Explore more about data collection and cleaning techniques here.
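
Because cleaning and privacy go hand in hand, a first cleaning pass often strips markup and masks obvious personal data at the same time. A minimal sketch, assuming HTML-ish input and US-style phone numbers; the regular expressions are illustrative assumptions and will miss many formats of personal information, so treat this as a starting point rather than a complete PII filter.

```python
import html
import re

TAG_RE = re.compile(r"<[^>]+>")                              # HTML/XML tags
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")       # simple email shape
PHONE_RE = re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b")      # e.g. 555-123-4567
WS_RE = re.compile(r"\s+")


def clean_record(raw: str) -> str:
    """Remove markup, decode entities, mask emails and phone numbers, tidy whitespace."""
    text = html.unescape(TAG_RE.sub(" ", raw))  # drop tags, then decode e.g. &amp; -> &
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return WS_RE.sub(" ", text).strip()


print(clean_record("<p>Contact Dr. Lee at lee.j@clinic.example.org or 555-123-4567.</p>"))
# Contact Dr. Lee at [EMAIL] or [PHONE].
```

Replacing personal data with placeholder tokens, rather than deleting it, keeps sentences grammatical so the model still learns sensible structure from the surrounding text.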

Navigating Ethical Considerations and Avoiding Harmful Content

Ensuring our LLMs are ethically sound and free from harmful biases is a responsibility we can’t overlook. Regular audits of your training data for biases and stereotypes are essential. Having diverse teams involved in the development process can provide different perspectives. Filtering out hate speech and misinformation from your training data is critical. Dive deeper into the ethical considerations in AI with this article from the Google AI Blog.
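
One simple way to start such an audit is to count how often words referring to different groups appear in the same sentence as particular attribute words; strongly skewed counts flag passages for human review. A minimal sketch, assuming naive sentence splitting and tiny hypothetical word lists; a real audit needs much larger, carefully reviewed lists and should treat the counts as a prompt for investigation, not a verdict.

```python
import re
from collections import Counter
from itertools import product
from typing import Dict, Iterable, List


def cooccurrence_audit(docs: Iterable[str], group_terms: Dict[str, List[str]],
                       attribute_terms: List[str]) -> Counter:
    """Count (group, attribute) pairs that appear together in the same sentence."""
    counts: Counter = Counter()
    for doc in docs:
        for sentence in re.split(r"[.!?]+", doc.lower()):  # naive sentence split
            words = set(re.findall(r"[a-z']+", sentence))
            groups = [g for g, terms in group_terms.items() if words & set(terms)]
            attrs = [a for a in attribute_terms if a in words]
            for g, a in product(groups, attrs):
                counts[(g, a)] += 1
    return counts


# Hypothetical word lists, for illustration only.
groups = {"female": ["she", "her"], "male": ["he", "him"]}
attributes = ["nurse", "engineer"]
print(cooccurrence_audit(["She is a nurse. He is an engineer.", "He is a nurse too."], groups, attributes))
```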

Download Our Comprehensive Whitepaper on LLM Training

If you’re as intrigued by all this as I am, there’s more. We’ve compiled a comprehensive whitepaper on LLM training that’s just waiting to be explored. It’s packed with insights, strategies, and a deeper dive into everything we’ve discussed here and more.


Leveraging Expertise in Data Labeling

Partnering with a pro like SmartOne can make a world of difference in data labeling. SmartOne’s expertise ensures that your training data is precisely annotated, directly translating to the efficiency and effectiveness of your LLM. Discover more about how SmartOne’s data labeling services can turbocharge your LLM training. Contact SmartOne for your data labeling needs.

From understanding model architectures to the fine art of data labeling, we’ve covered a lot in our journey through the world of Large Language Models. The field of AI and LLMs is constantly evolving, and staying informed is key. Keep exploring, keep learning, and most importantly, keep experimenting. The possibilities are endless, and the future is incredibly exciting.

Looking forward to seeing what incredible things you’ll achieve with your newfound knowledge in LLM training. Until next time, happy data modeling!

Recent articles

Generative AI summit 2024

Generative AI summit 2024

Client Case Study: Automated Accounting for Intelligent Processing

Client Case Study: Automated Accounting for Intelligent Processing

Client Case Study: AI Logistics Control

Client Case Study: AI Logistics Control

Client Case Study: Inquiry Filter for a CRM Platform

Client Case Study: Inquiry Filter for a CRM Platform

Client Case Study: Product Classification

Client Case Study: Product Classification

Client Case Study: Interius Farms Revolutionizing Vertical Farming with AI and Robotics

Client Case Study: Interius Farms Revolutionizing Vertical Farming with AI and Robotics

Client Case Study: Virtual Apparels Try-On

Client Case Study: Virtual Apparels Try-On

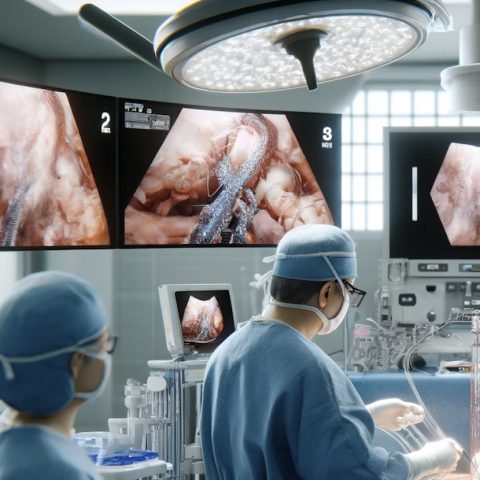

Client Case Study: SURGAR Delivering Augmented Reality for Laparoscopic Surgery

Client Case Study: SURGAR Delivering Augmented Reality for Laparoscopic Surgery

Client Case Study: Drone Intelligent Management

Client Case Study: Drone Intelligent Management

Client Case Study: Query-item matching for database management

Client Case Study: Query-item matching for database management