The Role of Training Data in Natural Language Processing (NLP) AI Models

May 5, 2024

Natural language, the language we use in everyday conversation, presents a wealth of opportunities for AI technologies. If we can teach machines to understand its basic principles and nuances, tools such as chatbots become far more capable.

For several years, chatbots have been a hot topic in the tech world, thanks to the advancement of Natural Language Processing (NLP) technology. But what exactly is NLP, and how does it function? This comprehensive guide aims to provide a deeper understanding of this innovative technology and the pivotal role of training data for its successful implementation.

Deciphering Natural Language Processing

Natural language is the language people use to communicate in their daily interactions, whether spoken or written. Until recently, comprehending this language was a challenge for machines. However, AI researchers are now developing technology capable of understanding natural language, paving the way for future breakthroughs.

There are three fundamental steps in natural language processing:

Decoding the user’s input and interpreting its meaning using specific algorithms.

Deciding the suitable response to the user’s input.

Generating an understandable output response for the user.

These steps correspond to three main actions: comprehension, decision-making, and responding.

Comprehension: The machine must first understand the user’s input. This step employs natural language understanding (NLU), a subfield of NLP.

Decision-making: The machine must decide how to react to the user’s input. For instance, if you instruct Alexa to order toilet paper from Amazon, Alexa must recognize the request as a purchase and decide to place the order.

Responding: Finally, the machine must respond to the user’s input. After Alexa successfully places your order, it should inform you: “I have ordered toilet paper, and it should arrive tomorrow.”
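The three actions above can be sketched as a small pipeline. This is a minimal illustration only: the keyword rule, the `Intent` class, and the function names are hypothetical, and a production assistant would use trained models rather than string matching.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Intent:
    action: str
    item: str


def comprehend(utterance: str) -> Optional[Intent]:
    """Comprehension (NLU): turn raw text into a structured intent."""
    words = utterance.lower().rstrip(".!?").split()
    if "order" in words:
        # Everything after the word "order" is treated as the item name.
        item = " ".join(words[words.index("order") + 1:])
        if item:
            return Intent(action="order", item=item)
    return None


def decide(intent: Optional[Intent]) -> dict:
    """Decision-making: choose how to react to the intent."""
    if intent is None:
        return {"status": "unknown"}
    # A real assistant would call an ordering service here (not shown).
    return {"status": "ordered", "item": intent.item}


def respond(result: dict) -> str:
    """Responding: produce an output the user can understand."""
    if result["status"] == "ordered":
        return f"I have ordered {result['item']}."
    return "Sorry, I did not understand that."


def handle(utterance: str) -> str:
    return respond(decide(comprehend(utterance)))


print(handle("Order toilet paper"))
```

Keeping the stages as separate functions mirrors the description above: each stage can be improved or retrained without touching the other two.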

Despite advancements, many NLP applications, such as chatbots, still struggle to match human conversational abilities and often respond with a limited set of phrases.

Sentiment analysis, a method of identifying and categorizing opinions within a text to understand the author’s attitude, is a crucial part of NLP, particularly when building chatbots. This process impacts the “responding” stage of NLP. The same input text might necessitate different responses from the chatbot depending on the user’s sentiment, so the sentiment of each training example must be annotated for the algorithm to learn from it.
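To make the point about sentiment concrete, here is a small sketch in which two training examples share the same text but carry different annotated sentiments, and the reply is chosen from the label. The record layout, the label names, and the reply wording are all assumptions made for illustration.

```python
# Hypothetical reply templates keyed by annotated sentiment.
RESPONSES = {
    "positive": "Glad to hear it! Is there anything else I can help with?",
    "negative": "I am sorry about that. Can you tell me what went wrong?",
    "neutral": "Thanks for letting us know.",
}

# Same text, different sentiment labels supplied by annotators.
examples = [
    {"text": "My order arrived today.", "sentiment": "positive"},
    {"text": "My order arrived today.", "sentiment": "negative"},
]


def choose_response(example: dict) -> str:
    """Pick a reply from the sentiment label, defaulting to neutral."""
    return RESPONSES.get(example["sentiment"], RESPONSES["neutral"])


for example in examples:
    print(example["sentiment"], "->", choose_response(example))
```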

To enhance the decision-making abilities of AI models, data scientists need to provide them with vast amounts of training data. This allows the models to identify patterns and make accurate predictions. However, raw data, such as audio recordings or text messages, is insufficient for training machine learning models. The data must first be labeled and organized into an NLP training dataset.

Role of Data Annotation in Natural Language Processing

Entity annotation involves labeling unstructured sentences with relevant information, enabling machine comprehension. For instance, this could involve tagging all individuals, organizations, and locations in a document. In the sentence “My name is Andrew,” Andrew must be accurately tagged as a person’s name for the NLP algorithm to function correctly.
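One common way to store an entity annotation is as a character span plus a label. The sketch below assumes that representation; the `EntitySpan` class, the `PERSON` label, and the validation rules are illustrative choices, not a format prescribed by this article.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EntitySpan:
    start: int  # inclusive character offset
    end: int    # exclusive character offset
    label: str


def span_text(text: str, span: EntitySpan) -> str:
    """Return the piece of text that the span covers."""
    return text[span.start:span.end]


def validate(text: str, spans: List[EntitySpan]) -> bool:
    """Check that spans are in bounds, non-empty, and do not overlap."""
    ordered = sorted(spans, key=lambda s: s.start)
    for s in ordered:
        if not (0 <= s.start < s.end <= len(text)):
            return False
    for first, second in zip(ordered, ordered[1:]):
        if second.start < first.end:
            return False
    return True


text = "My name is Andrew"
annotations = [EntitySpan(start=11, end=17, label="PERSON")]

print(span_text(text, annotations[0]), annotations[0].label)
```

Storing offsets rather than the entity string keeps the annotation unambiguous when the same word appears more than once in a document.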

Linguistic text annotation is also crucial for NLP. Annotators are sometimes asked to mark which words are nouns, verbs, adverbs, and so on. These tasks can quickly become complex, as not every annotator has the linguistic knowledge needed to distinguish the parts of speech reliably.
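Because part-of-speech labeling is error-prone, one simple check is to have two annotators tag the same tokens and compare their answers. The sketch below assumes that setup; the example sentence, the tag names, and the plain percentage agreement measure are illustrative assumptions.

```python
from typing import List, Tuple

tokens = ["She", "quickly", "ordered", "paper"]
annotator_a = ["PRON", "ADV", "VERB", "NOUN"]
annotator_b = ["PRON", "ADJ", "VERB", "NOUN"]  # disagrees on "quickly"


def agreement(a: List[str], b: List[str]) -> float:
    """Fraction of tokens on which both annotators chose the same tag."""
    if len(a) != len(b) or not a:
        raise ValueError("tag sequences must be non-empty and equally long")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def disagreements(
    toks: List[str], a: List[str], b: List[str]
) -> List[Tuple[str, str, str]]:
    """List (token, tag_a, tag_b) for every token the annotators disagree on."""
    return [(t, x, y) for t, x, y in zip(toks, a, b) if x != y]


print(agreement(annotator_a, annotator_b))
print(disagreements(tokens, annotator_a, annotator_b))
```

Tokens that appear in the disagreement list are natural candidates for review by someone with stronger linguistic training.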

Does Natural Language Processing Vary Across Languages?

In English, words are separated by spaces. However, this isn’t the case in some languages, like Japanese, where text is written without spaces between words. Although the technology required for audio analysis remains largely consistent across languages, text analysis in languages like Japanese requires an additional step: each sentence must be segmented into words before individual words can be annotated.
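The extra segmentation step can be illustrated with a toy forward maximum-matching segmenter. This is a deliberately simple technique swapped in for illustration; the tiny lexicon is hypothetical, and real Japanese pipelines generally rely on dedicated morphological analyzers instead.

```python
from typing import List, Set

# Hypothetical miniature lexicon; a real one would hold many thousands of words.
LEXICON: Set[str] = {"私", "は", "猫", "が", "好き"}


def segment(text: str, lexicon: Set[str]) -> List[str]:
    """Greedy forward maximum matching: take the longest known word at each position."""
    max_len = max((len(word) for word in lexicon), default=1)
    tokens: List[str] = []
    i = 0
    while i < len(text):
        # Try the longest candidate first and shrink until a match is found.
        for size in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + size]
            # A single character is always accepted so the loop makes progress.
            if size == 1 or candidate in lexicon:
                tokens.append(candidate)
                i += size
                break
    return tokens


sentence = "私は猫が好き"  # "I like cats"
print(segment(sentence, LEXICON))
```

Once the sentence is split this way, each token can be annotated just as space-separated English words would be.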

Enhanced Understanding Through NLP Technologies

At the core of these technologies lies the ability to parse and analyze massive volumes of language data. By employing deep learning algorithms, NLP systems can recognize patterns and learn from them, progressively improving their accuracy in understanding user intent. This technology does not just grasp the literal meaning of words but also appreciates the subtleties of context, tone, and emotion, which are crucial for effective communication.

Moreover, advancements in semantic understanding allow NLP systems to perform tasks that require a deeper grasp of human language. Examples include summarizing long documents, generating content, and even translating languages with a high degree of precision. This enhanced understanding is pivotal in developing AI applications that are more accessible, useful, and seamlessly integrated into human workflows.

Data Annotation: Deepening the AI’s Grasp of Language

Data annotation is the labor-intensive but crucial process behind the scenes of every effective NLP application. This meticulous task involves labeling textual data with metadata that describes its linguistic structure, intent, and semantic meaning.

Annotators play a key role as they identify and mark up various elements of the text, such as entities (e.g., people, places, and organizations) and relationships between those entities. They also categorize parts of speech, annotate syntactic dependencies, and label pragmatic features such as tone and style. Each of these annotations helps the AI to “understand” the text in a way that aligns more closely with human understanding.

Furthermore, sentiment annotation is a sophisticated aspect of data annotation that involves labeling the sentiment conveyed in a text as positive, negative, or neutral. This ability is crucial for applications like customer service chatbots, which need to respond appropriately to the emotional tone of customer inquiries or complaints. The precision and depth of data annotation directly influence how effectively NLP technologies can engage in and sustain meaningful interactions with users.
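When several annotators label the same text, their sentiment votes have to be merged into one training label. The sketch below uses a simple majority vote and flags ties for review; this aggregation rule and the function name are assumptions for illustration, not a process described in this article.

```python
from collections import Counter
from typing import List, Optional

LABELS = {"positive", "negative", "neutral"}


def aggregate(votes: List[str]) -> Optional[str]:
    """Return the majority label, or None when there is no clear winner."""
    unknown = set(votes) - LABELS
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    if not votes:
        return None
    ranked = Counter(votes).most_common()
    # A tie between the top two labels means the item needs human review.
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]


print(aggregate(["negative", "negative", "neutral"]))
print(aggregate(["positive", "negative"]))
```

Returning `None` for ties keeps ambiguous items out of the training set until someone has adjudicated them.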

The entire process of natural language processing involves developing appropriate operations and tools, collecting raw data for annotation, and engaging both project managers and workers to annotate the data.

For machine learning, the volume of training data is a key to success. While English is the primary language for many top companies conducting AI research, such as Amazon, Apple, and Facebook, the most crucial factor for any language remains the volume of available training data. Volume is equally critical for other AI fields, such as computer vision and content categorization. Data quality matters as well: poorly labeled data is a significant bottleneck to technological advancement.

So if you and your team need help with some text/NLP data annotation services, please feel free to reach out!

Recent articles

Generative AI summit 2024

Generative AI summit 2024

Client Case Study: Automated Accounting for Intelligent Processing

Client Case Study: Automated Accounting for Intelligent Processing

Client Case Study: AI Logistics Control

Client Case Study: AI Logistics Control

Client Case Study: Inquiry Filter for a CRM Platform

Client Case Study: Inquiry Filter for a CRM Platform

Client Case Study: Product Classification

Client Case Study: Product Classification

Client Case Study: Interius Farms Revolutionizing Vertical Farming with AI and Robotics

Client Case Study: Interius Farms Revolutionizing Vertical Farming with AI and Robotics

Client Case Study: Virtual Apparels Try-On

Client Case Study: Virtual Apparels Try-On

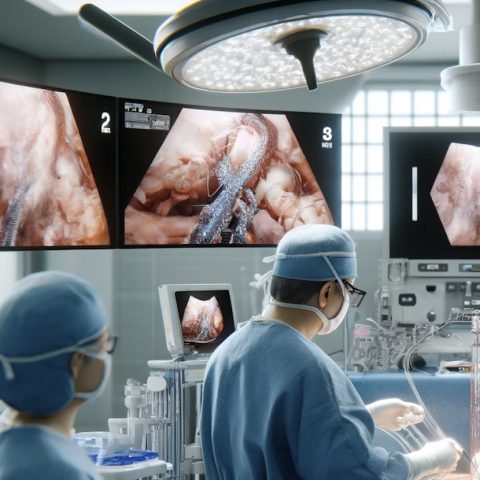

Client Case Study: SURGAR Delivering Augmented Reality for Laparoscopic Surgery

Client Case Study: SURGAR Delivering Augmented Reality for Laparoscopic Surgery

Client Case Study: Drone Intelligent Management

Client Case Study: Drone Intelligent Management

Client Case Study: Query-item matching for database management

Client Case Study: Query-item matching for database management