The Must-Read Guide to Training Data in Natural Language Processing

May 17, 2024

Everyday conversations hold immense potential for artificial intelligence. Teaching machines to understand and respond to natural language significantly improves technologies such as chatbots and broadens what tech tools can do across industries. This guide takes a closer look at Natural Language Processing (NLP) to explain how the technology works and why training data is crucial for its successful deployment.

So, What Exactly is Natural Language Processing (NLP)?

Simply put, Natural Language Processing, or NLP, is a field of artificial intelligence focused on the interaction between computers and humans through natural language. Its ultimate objective is to enable machines to read, interpret, and make sense of human language in a useful way.

The Key Components of NLP:

NLP involves several critical steps to function effectively:

Decoding the User’s Input: The first step is interpreting what the user says in a form the machine can understand.

Decision-Making: Next, the machine uses algorithms to decide the appropriate response based on the user input.

Responding: Finally, the machine generates a response that the user can understand.

These steps correspond to three main actions: comprehension, decision-making, and responding. For example, when you ask Amazon’s Alexa to order toilet paper, Alexa comprehends the request, decides the best way to fulfill it, and confirms the action by responding. Check out our popular article on How to Annotate Audio Data for Machine Learning Model Training to learn more about how Alexa and other AI assistants can be set up.
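To make the decode, decide, and respond steps concrete, here is a minimal Python sketch. It is only an illustration: the intent names, the regular expressions, and the reply wording are all hypothetical, and a real assistant would rely on trained models rather than hand-written patterns.

```python
import re

# Hypothetical intent patterns; a production assistant would use a trained
# classifier rather than hand-written regular expressions.
INTENT_PATTERNS = {
    "order_item": re.compile(r"\border (?:some |more )?(?P<item>.+)", re.IGNORECASE),
    "get_weather": re.compile(r"\bweather\b", re.IGNORECASE),
}


def decode(utterance: str) -> dict:
    """Step 1: turn raw text into a structured intent the machine can act on."""
    for intent, pattern in INTENT_PATTERNS.items():
        match = pattern.search(utterance)
        if match:
            return {"intent": intent, "slots": match.groupdict()}
    return {"intent": "unknown", "slots": {}}


def decide(parsed: dict) -> dict:
    """Step 2: choose an action based on the decoded intent."""
    if parsed["intent"] == "order_item":
        return {"action": "place_order", "item": parsed["slots"]["item"].strip(" .!?")}
    if parsed["intent"] == "get_weather":
        return {"action": "fetch_forecast"}
    return {"action": "ask_to_rephrase"}


def respond(decision: dict) -> str:
    """Step 3: render the chosen action as a reply the user can understand."""
    if decision["action"] == "place_order":
        return f"OK, I've added {decision['item']} to your order."
    if decision["action"] == "fetch_forecast":
        return "Here is today's forecast."
    return "Sorry, I didn't catch that. Could you rephrase?"


def handle(utterance: str) -> str:
    """Run the three steps in sequence."""
    return respond(decide(decode(utterance)))


print(handle("Alexa, order toilet paper"))
```

Keeping the three steps as separate functions mirrors the description above and makes it easy to swap the rule-based decoder for a model trained on annotated data.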

The Critical Role of Training Data in NLP:

Training data is the core of any NLP system. This data teaches AI models how to understand and interact with human language. But what makes good training data?

Good training data must be:

Voluminous: The more data, the better, allowing the model to learn from many scenarios.

Varied: Diversity in data helps the model handle different dialects, slang, and contexts.

Accurate: High-quality annotations are crucial for teaching the model correctly.

Training data can come from various sources, such as our 12 Best & Free Natural Language Processing Datasets, web scraping, and proprietary data collections. However, raw data alone is not enough. It must be cleaned, labelled, and organized into a structured format that models can learn from.
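As a rough illustration of what "cleaned, labelled, and organized" can look like in practice, the sketch below uses only Python's standard library. The sample texts, the field names (`id`, `text`, `label`), and the choice of JSON Lines as the output format are assumptions made for this example, not a prescribed pipeline.

```python
import html
import json
import re
import unicodedata


def clean_text(raw: str) -> str:
    """Normalize one raw sample: unescape entities, strip tags, tidy whitespace."""
    text = html.unescape(raw)                    # "&amp;" -> "&"
    text = re.sub(r"<[^>]+>", " ", text)         # drop simple HTML tags
    text = unicodedata.normalize("NFKC", text)   # unify compatibility characters
    return re.sub(r"\s+", " ", text).strip()     # collapse runs of whitespace


def build_records(samples: list[tuple[str, str]]) -> list[dict]:
    """Clean, de-duplicate, and structure (text, label) pairs."""
    seen = set()
    records = []
    for raw, label in samples:
        text = clean_text(raw)
        key = text.lower()
        if not text or key in seen:              # skip empties and duplicates
            continue
        seen.add(key)
        records.append({"id": len(records), "text": text, "label": label})
    return records


# Hypothetical raw samples, as they might arrive from web scraping.
raw_samples = [
    ("<p>Great   product &amp; fast shipping!</p>", "positive"),
    ("great product & fast shipping!", "positive"),   # duplicate after cleaning
    ("   ", "neutral"),                                # empty after cleaning
    ("The package arrived damaged.", "negative"),
]

records = build_records(raw_samples)
jsonl = "\n".join(json.dumps(record, ensure_ascii=False) for record in records)
print(jsonl)
```

Writing one JSON object per line keeps each labelled example self-contained, which is convenient when datasets are streamed or split across files.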

Data Annotation: The Backbone of Effective NLP

Data annotation is a critical process in developing NLP systems. It involves tagging training data with labels that describe its linguistic structure, intent, and semantic meaning. This meticulous task is the foundational step in teaching AI models how to interpret human language accurately. In practice, data annotation encompasses several layers:

Entity Annotation: This annotation type focuses on identifying and labelling specific entities within the text, such as people, places, organizations, and dates. For example, in the sentence “George Washington was born in Virginia,” “George Washington” would be tagged as a person and “Virginia” as a location. This helps the NLP system recognize and categorize entities correctly, which is crucial for tasks like information extraction and entity linking.

Part-of-Speech (POS) Tagging: Every word in a sentence is labelled according to its part of speech, whether a noun, verb, adjective, etc. This annotation layer helps the model understand grammatical structures and how words in a sentence relate, enhancing the model’s ability to parse text and understand syntax.

Syntactic Dependency Annotation: This involves marking the relationships between the words that form a sentence’s structure. For instance, in the sentence “The cat sat on the mat,” dependency annotations would indicate that “sat” is the main verb, with “the cat” as its subject and “on the mat” as a modifier expressing location. Understanding these dependencies is crucial for the AI to grasp sentence structure and meaning.

Semantic Annotation: Semantic annotations involve tagging words or phrases with their meanings, which helps the AI understand context and ambiguities in language usage. This could include linking a word to its meaning in a knowledge base or annotating the sentence to reflect implied meanings or emotions.

Pragmatic Annotation: Beyond the literal meaning, pragmatic annotations consider language’s context and intended impact. This includes tone, politeness, and style, critical for applications like dialogue systems where the machine needs to mimic human-like interactions.
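The first three layers above can be sketched as plain data structures, using the two example sentences from the text. This is a minimal illustration under stated assumptions: the `EntitySpan` class and the helper functions are hypothetical, and the tag and relation names loosely follow Universal Dependencies conventions, which is a choice made for this example rather than a requirement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntitySpan:
    start: int   # character offset, inclusive
    end: int     # character offset, exclusive
    label: str


def annotate_entity(text: str, phrase: str, label: str) -> EntitySpan:
    """Locate the first occurrence of `phrase` and return it as a labelled span."""
    start = text.index(phrase)   # raises ValueError if the phrase is absent
    return EntitySpan(start, start + len(phrase), label)


# Entity annotation for the example sentence from the text.
sentence = "George Washington was born in Virginia"
entities = [
    annotate_entity(sentence, "George Washington", "PERSON"),
    annotate_entity(sentence, "Virginia", "LOCATION"),
]

# POS and dependency annotation for "The cat sat on the mat".
# Each row: (index, word, part-of-speech tag, head index, relation).
# A head index of 0 marks the root of the sentence.
tokens = [
    (1, "The", "DET", 2, "det"),
    (2, "cat", "NOUN", 3, "nsubj"),
    (3, "sat", "VERB", 0, "root"),
    (4, "on", "ADP", 6, "case"),
    (5, "the", "DET", 6, "det"),
    (6, "mat", "NOUN", 3, "obl"),
]


def check_heads(rows) -> bool:
    """Sanity check: exactly one root, and every head index refers to a token."""
    indices = {row[0] for row in rows}
    roots = [row for row in rows if row[3] == 0]
    heads_ok = all(row[3] == 0 or row[3] in indices for row in rows)
    return len(roots) == 1 and heads_ok


for span in entities:
    print(sentence[span.start:span.end], "->", span.label)
print("heads consistent:", check_heads(tokens))
```

Storing entities as character offsets instead of copied strings keeps the annotation tied to the exact position in the text, which matters when the same name appears more than once.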

Each annotation contributes to a comprehensive understanding of the text, enabling NLP models to perform complex tasks such as question answering, machine translation, and sentiment analysis more effectively. The precision and depth of these annotations directly influence the performance of NLP technologies, making data annotation an indispensable part of the AI development process.

The Impact of Data Annotation on Model Accuracy:

The precision of data annotation significantly impacts the performance of NLP models. Accurate data annotations are crucial as they influence the model’s ability to interpret, learn from, and respond to human language effectively. Here’s a deeper look at how data annotation affects model accuracy:

Enhanced Model Training: Well-annotated data provides clear examples for training AI models. For instance, in sentiment analysis, the annotation determines whether a phrase is positive, negative, or neutral. This training allows models to learn the nuances of human emotions and respond appropriately. If a model is trained with poorly annotated data, its ability to correctly interpret sentiment is compromised, leading to inaccurate responses and a poor user experience.

Consistency Across Varied Inputs: Data annotation must be consistent to ensure the model performs reliably across different datasets and real-world scenarios. This is especially important in tasks such as language translation or content moderation, where inconsistent annotations can lead to significant errors in output. For instance, if the sentiment ‘frustration’ is sometimes labelled as ‘anger,’ the model might fail to differentiate these subtly different emotions, affecting the accuracy of interaction and analysis.

Contextual Understanding and Response Generation: In complex dialogue systems, the context in which a word or phrase is used can vastly change its meaning. For instance, “I’m fine” can be straightforward or sarcastic, depending on context and tone. Precise annotations that include contextual and tonal indicators help models understand and generate contextually appropriate responses. This is particularly critical in customer service bots and interactive AI, where responding aptly to the mood and context of the user can define the quality of service.

Adapting to User Feedback: Accurate data annotation allows NLP systems to adapt better to user feedback. In interactive learning scenarios, where the model is updated based on user interactions, high-quality annotations make those adjustments more effective. A closely related iterative process, active learning, has the model flag the examples it is least certain about for human annotation, so it depends heavily on the initial annotations to guide the learning algorithms correctly.

Reducing Bias and Enhancing Fairness: Finally, precise data annotation is pivotal in reducing biases in NLP models. Biased data can lead AI systems to develop skewed understandings of specific phrases or concepts, perpetuating stereotypes or leading to discriminatory outcomes. By ensuring that data annotations are diverse and representative of various demographics, teams can train models to be fairer and more objective.
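One common way to put a number on annotation consistency is Cohen's kappa, which measures how often two annotators agree after correcting for agreement expected by chance. The sketch below is a plain-Python illustration; the two label lists are made up for this example and echo the frustration versus anger case mentioned above.

```python
from collections import Counter


def cohen_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label by both.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement: chance of matching given each annotator's label rates.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:          # both annotators used one identical label throughout
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical labels from two annotators for the same six utterances.
annotator_a = ["anger", "frustration", "frustration", "neutral", "anger", "frustration"]
annotator_b = ["anger", "anger", "frustration", "neutral", "anger", "anger"]

kappa = cohen_kappa(annotator_a, annotator_b)
print(f"kappa = {kappa:.2f}")
```

A value near 1 indicates strong agreement, while a value near 0 means the annotators agree about as often as chance would predict. A low score on a pair of labels such as these is a signal that the annotation guidelines need a clearer distinction.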

The overall impact of data annotation on model accuracy cannot be overstated. It underpins the model’s ability to perform its intended tasks accurately and efficiently, making it a cornerstone of successful NLP applications.
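The active learning process mentioned above can be sketched with uncertainty sampling, one widely used selection strategy in which the examples the model is least sure about are sent to human annotators first. The probabilities and texts below are hypothetical model outputs invented for this illustration, and entropy is only one of several possible uncertainty measures.

```python
import math


def entropy(probs: list[float]) -> float:
    """Shannon entropy of a predicted label distribution (higher = less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Return the `budget` texts whose predictions are most uncertain."""
    ranked = sorted(predictions, key=lambda text: entropy(predictions[text]), reverse=True)
    return ranked[:budget]


# Hypothetical model outputs: P(positive), P(negative), P(neutral) per text.
predictions = {
    "I love this phone.": [0.95, 0.03, 0.02],
    "I'm fine.": [0.40, 0.35, 0.25],
    "Well, that was something.": [0.34, 0.33, 0.33],
    "Worst service ever.": [0.02, 0.96, 0.02],
}

print(select_for_annotation(predictions, budget=2))
```

In a full loop, the selected texts would be labelled by annotators, added to the training set, and the model retrained before the next round of selection.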

NLP Across Different Languages:

The foundational technology behind Natural Language Processing remains consistent across languages. Yet, the application of this technology must be specially adapted to accommodate the unique linguistic features of each language. Languages like Japanese, which do not separate words with spaces, present specific challenges that necessitate advanced adaptations in text analysis.

Segmentation Challenges: In languages such as Japanese and Chinese, the absence of clear word boundaries requires sophisticated segmentation algorithms. These algorithms are designed to accurately identify where one word ends and another begins, a process known as tokenization. Effective tokenization is critical because it forms the basis for all subsequent NLP tasks, such as parsing and part-of-speech tagging.

Contextual Nuances: Beyond structural differences, linguistic nuances also affect how NLP technologies are applied. For instance, many languages feature extensive inflectional systems where the form of a word changes to express different grammatical categories such as tense, case, or gender. Systems developed for English, with its relatively simple inflectional scheme, may struggle with the complexity of languages like Russian or Arabic and require modifications to handle these intricacies.

Cultural Contexts: Cultural differences also significantly affect how language is used and understood. Idioms, colloquialisms, and cultural references can vary dramatically between languages and regions. NLP systems must be trained with culturally relevant examples to ensure that they understand and generate appropriate and contextually accurate language.

Script Diversity: A language’s script can also affect NLP. Languages written in non-Latin scripts, such as Arabic, Hindi, or Korean, often require specialized optical character recognition (OCR) technology and font handling. Each script has its own set of characters and rules for character formation and combination, adding another layer of complexity to text processing.

Adaptation Strategies: NLP researchers and developers employ various strategies to overcome these challenges. These include the development of language-specific models and training datasets, using universal language models that are fine-tuned for specific languages, and incorporating multilingual training data that helps models learn to transfer knowledge from one language to another.
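To give a feel for the segmentation challenge described above, here is a sketch of forward maximum matching, a classic dictionary-based baseline that greedily takes the longest known word at each position. The tiny vocabulary is hypothetical, and modern segmenters typically use statistical or neural models trained on annotated text instead of a fixed word list.

```python
def max_match(text: str, vocabulary: set[str]) -> list[str]:
    """Segment unspaced text by greedily taking the longest known word."""
    longest = max([len(word) for word in vocabulary] + [1])
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a word is found.
        for size in range(min(longest, len(text) - i), 0, -1):
            candidate = text[i:i + size]
            if size == 1 or candidate in vocabulary:
                tokens.append(candidate)   # unknown single characters pass through
                i += size
                break
    return tokens


# Hypothetical mini-dictionary. Note the overlapping entries: "東京都" (Tokyo
# Metropolis) also contains "東京" (Tokyo) and "京都" (Kyoto).
vocabulary = {"東京", "東京都", "京都", "に", "住む"}

print(max_match("東京都に住む", vocabulary))
```

The overlapping dictionary entries show why segmentation is ambiguous: the same characters can be split in more than one way, and a greedy rule will not always choose the reading a human would.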

Addressing these challenges can make NLP technologies more accurate and effective in language processing, making AI systems accessible and useful to a global audience. This adaptability not only enhances the functionality of AI applications but also broadens their impact across different linguistic and cultural landscapes.
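The point about character formation and combination can be seen with Unicode normalization. In the sketch below, which uses Python's standard `unicodedata` module, the same Korean syllable is stored either as one precomposed code point or as three combining letters (jamo). The `normalize_for_training` helper is a hypothetical name, and choosing NFC as the target form is an assumption for this example.

```python
import unicodedata


def normalize_for_training(text: str) -> str:
    """Compose characters into canonical form (NFC) so equal text compares equal."""
    return unicodedata.normalize("NFC", text)


composed = "\ud55c"                                   # the Hangul syllable 한, precomposed
decomposed = unicodedata.normalize("NFD", composed)   # same syllable as separate jamo

print(len(composed), len(decomposed))                 # code point counts differ
print(composed == decomposed)                         # raw comparison fails
print(normalize_for_training(decomposed) == composed)

# Inspect the individual code points of the decomposed form.
for ch in decomposed:
    print(f"U+{ord(ch):04X}", unicodedata.name(ch, "UNKNOWN"))
```

Applying one normalization form consistently before annotation and training helps avoid treating visually identical strings as different tokens.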

Advancements in NLP Technology:

As NLP technologies advance, they increasingly use deep learning to parse and analyze large volumes of language data. This allows NLP systems to understand not only the literal meaning of words but also subtleties of context, tone, and emotion, all of which are key to effective communication.

Training data is at the heart of NLP’s success. As technologies advance, the demand for high-quality, well-annotated training data, the kind we at SmartOne AI specialize in, will only increase. The better we can equip our AI models with good training data, the more sophisticated and valuable they will become.

Whether you’re a data scientist, developer, project/product lead or an AI enthusiast, understanding the critical role of training data in NLP can help you appreciate the complexities and capabilities of modern AI technologies. If you have questions or thoughts about NLP, please don’t hesitate to reach out to us. We are always happy to chat with those in or exploring the AI field.

Recent articles

Generative AI summit 2024

Generative AI summit 2024

Client Case Study: Automated Accounting for Intelligent Processing

Client Case Study: Automated Accounting for Intelligent Processing

Client Case Study: AI Logistics Control

Client Case Study: AI Logistics Control

Client Case Study: Inquiry Filter for a CRM Platform

Client Case Study: Inquiry Filter for a CRM Platform

Client Case Study: Product Classification

Client Case Study: Product Classification

Client Case Study: Interius Farms Revolutionizing Vertical Farming with AI and Robotics

Client Case Study: Interius Farms Revolutionizing Vertical Farming with AI and Robotics

Client Case Study: Virtual Apparels Try-On

Client Case Study: Virtual Apparels Try-On

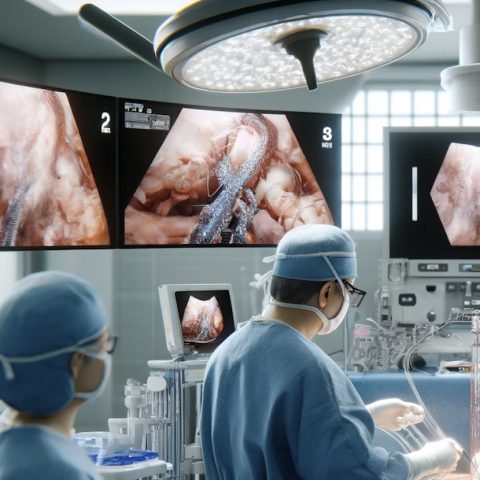

Client Case Study: SURGAR Delivering Augmented Reality for Laparoscopic Surgery

Client Case Study: SURGAR Delivering Augmented Reality for Laparoscopic Surgery

Client Case Study: Drone Intelligent Management

Client Case Study: Drone Intelligent Management

Client Case Study: Query-item matching for database management

Client Case Study: Query-item matching for database management