How to Annotate Audio Data for Machine Learning Model Training

May 2, 2024

So What Exactly is Audio Data Annotation?

Audio data annotation is the process of attaching descriptions or tags to audio files so that machine learning models can interpret sound. It is an important step in training and fine-tuning AI models for applications such as speech recognition and audio event detection.

The Buzz about Audio Annotation

Imagine living in a world where technology can discern a whisper in a storm, recognize the nuanced differences between thousands of bird songs, or even predict a machinery failure before it happens. This isn’t a scene from a futuristic novel; it’s the reality we’re steering toward with the power of AI audio data annotation or audio labeling. A robust foundation of annotated audio data is indispensable for training AI models to recognize voices, music genres, or specific sounds in noisy environments.

Enhancing User Experience with Voice-Activated Services: Audio data annotation plays a pivotal role in voice-activated services. Virtual assistants like Apple Siri, Amazon Alexa, or Google Assistant have become ubiquitous in our daily lives, assisting us with everything from setting alarms to providing real-time weather updates. For these AI systems to understand and respond to a myriad of voice commands accurately, they require extensive training on datasets where human speech is meticulously labelled, noting variations in accent, pitch, and intonation.

Revolutionizing Healthcare through Audio Analysis: The healthcare sector stands to gain immensely from advancements in audio annotation. AI models are now trained to recognize coughs, breathing patterns, and even subtle voice changes that may indicate health issues such as infections, respiratory problems, or changes in mental health states. Annotated audio data enables these models to differentiate between a wide range of sounds for diagnostic purposes, potentially offering non-invasive, early detection of conditions.

Advancing Security Measures with Sound Recognition: Security systems increasingly leverage audio data annotation to enhance their capabilities. Trained on audio annotated with sounds that could indicate intrusions, such as breaking glass, alarms, or unexpected activity in restricted areas, AI models can provide real-time alerts. This application is not limited to building security; it extends to data centers, where anomalous sounds could indicate hardware failures or unauthorized physical access.

Transforming Environmental Research with Bioacoustic Monitoring: Bioacoustic monitoring relies heavily on annotated audio data in environmental research. Scientists annotate recordings of natural habitats to study biodiversity, monitor endangered species, and even detect illegal logging activities by recognizing chainsaw sounds. This data helps understand ecological dynamics, assess habitat health, and guide conservation efforts.

Improving Public Safety with Emergency Detection Systems: AI systems are also being trained to recognize audio cues that could indicate emergencies, such as car crashes, screams, or gunshots. Annotated audio data allows these systems to discern these critical sounds from background noise, enabling quicker response times for emergency services and potentially saving lives.

Types of Audio Annotation

Understanding the main types of audio annotation shows how much complexity audio data annotation involves, and it highlights the critical role each type plays in training AI systems for different audio tasks.

Speaker Identification: Speaker identification involves tagging audio recordings to indicate which individual is speaking at any given time. This process is crucial for applications such as automated meeting transcriptions, where statements must be attributed to the correct participant, or in security, where identifying a speaker’s voice can authenticate their identity. Advanced algorithms analyze vocal characteristics unique to individuals, such as pitch, tone, and speech patterns, to distinguish between speakers.

Emotion Recognition: Emotion recognition seeks to understand the emotions conveyed through voice tones, an area that’s gaining traction in customer service and mental health applications. AI models can detect underlying emotions like happiness, sadness, anger, or stress by analyzing pitch, speed, and intonation variations. This capability enables call centers to improve customer interaction by routing calls based on emotional cues or helps monitor patients’ mental health by assessing their emotional state over time.

Speech-to-Text Transcription: Speech-to-text transcription converts spoken words into written text and is the backbone for training language models. This transcription is vital for creating datasets that fuel natural language processing (NLP) applications, from voice-activated assistants to real-time captioning services. Accuracy in this process is paramount, as the quality of transcribed text directly affects the AI model’s ability to understand and generate human-like responses. If speech-to-text transcription is something you want to test out for yourself, jump over to this article on the best note-taking AI tools.

Sound Event Detection: Sound event detection involves recognizing and categorizing specific sounds or events within audio streams. This type encompasses various applications, from environmental monitoring (identifying sounds of particular animal species) to urban planning (analyzing traffic patterns through sound). The challenge here lies in accurately identifying sounds within complex acoustic environments, requiring sophisticated machine-learning models trained on diverse and detailed annotations.

Two Great Tools for Audio Data Annotation

Audacity stands out as a flexible and powerful audio editor, widely used for its broad range of features that cater to various audio annotation needs. Users can record live audio, cut, copy, splice, or mix sounds together, and apply effects to audio recordings. Its accessibility and comprehensive audio file editing tools make it a valuable resource for preparing audio datasets for annotation.

Sonic Visualiser is designed for visualization and measurement of audio files, offering a distinct approach to analyzing and annotating audio data. It allows users to view and explore the spectral content of audio files, making it easier to identify and label specific sound events. This tool is especially useful for research, where detailed analysis and categorization of sound features are required.
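Labels created in Audacity can be exported as a plain text file with one label per line: start time, end time, and label text, separated by tabs. The sketch below shows one way such an export might be read back for later processing. The `Label` type and `parse_audacity_labels` function are hypothetical names for this illustration, and the sample text is invented; lines beginning with a backslash (which can appear when a label carries a frequency range) are simply skipped here.

```python
from typing import List, NamedTuple


class Label(NamedTuple):
    start: float  # seconds
    end: float    # seconds (equal to start for a point label)
    text: str


def parse_audacity_labels(text: str) -> List[Label]:
    """Parse the contents of a tab-separated Audacity label export."""
    labels = []
    for line in text.splitlines():
        # Skip blank lines and optional frequency-range lines,
        # which start with a backslash.
        if not line.strip() or line.startswith("\\"):
            continue
        parts = line.split("\t")
        if len(parts) < 2:
            continue  # not a label line; ignore it
        name = parts[2] if len(parts) > 2 else ""
        labels.append(Label(float(parts[0]), float(parts[1]), name))
    return labels


# Invented sample: one region label and one point label.
example = "0.500000\t1.750000\tdog_bark\n2.000000\t2.000000\tdoor_slam\n"
labels = parse_audacity_labels(example)
```

Each parsed label keeps its times in seconds, so it can be matched against the audio samples once the sample rate is known.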

Preparing Data for Audio Annotation

Preparing data for audio annotation calls for a meticulous approach. It involves more than collecting and labelling audio recordings: each step must be carefully planned and executed to ensure the quality and relevance of the annotated data for AI training purposes.

Curating an Audio Dataset: The foundation of any audio annotation project is curating a diverse set of audio recordings. This collection should encompass a wide range of sound environments, speakers, dialects, and sound events reflective of the real-world scenarios the AI model will encounter. For instance, if developing a voice assistant, the dataset might include recordings from different age groups, accents, and background noises. The goal is to ensure the model’s robustness and ability to perform accurately across varied audio inputs.

Defining Annotation Categories: Before beginning the annotation process, it is crucial to determine the categories annotators will label. These categories should align with the objectives of the AI model and may include identifying different speakers through speech annotation, recognizing specific emotions conveyed through voice, or detecting distinct sound events. Clear definitions and examples for each category help annotators understand the nuances of their labelling, ensuring consistency and accuracy in the annotations.
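One way to keep category definitions and examples in a single place is a small label schema that annotation tooling can check against. The sketch below is only an illustration: the category names, the `Category` fields, and `normalize_label` are all assumptions, not part of any particular tool.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Category:
    name: str        # canonical label written into the dataset
    definition: str  # what annotators should mark with this label
    example: str     # a concrete example to anchor the definition


# Hypothetical categories for a small sound event project.
CATEGORIES: Dict[str, Category] = {
    c.name: c
    for c in [
        Category("speech", "Intelligible human speech", "A person reading a sentence"),
        Category("glass_break", "Glass shattering", "A window being broken"),
        Category("background", "No target event present", "Room tone, distant traffic"),
    ]
}


def normalize_label(raw: str) -> str:
    """Map annotator input to a canonical category name, or raise ValueError."""
    key = raw.strip().lower().replace(" ", "_")
    if key not in CATEGORIES:
        raise ValueError(f"Unknown category: {raw!r}")
    return key
```

Rejecting unknown labels at entry time is a design choice: it surfaces typos and undefined categories early rather than during model training.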

The Annotation Process

The annotation process involves labelling and classifying audio segments according to the predefined categories. This step requires annotators to listen to audio recordings and mark segments with relevant tags, sometimes adding timestamps and additional context to aid the AI model’s learning. Utilizing annotation software that allows for the visual representation of sound waves can enhance precision, enabling annotators to identify and label specific audio features more effectively.
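A labelled segment with timestamps and optional context can be represented as a small record. The following sketch assumes a hypothetical `Segment` type with times in seconds; it checks that each segment lies within the recording and reports overlapping segments, which may be legitimate (two sounds at once) but are often worth a second look.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Segment:
    start: float    # seconds from the beginning of the recording
    end: float      # seconds; must be greater than start
    label: str
    note: str = ""  # optional free-text context from the annotator


def validate_segments(segments: List[Segment], duration: float) -> List[str]:
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    for s in segments:
        if not (0.0 <= s.start < s.end <= duration):
            problems.append(f"bad time range: {s}")
    return problems


def find_overlaps(segments: List[Segment]) -> List[Tuple[Segment, Segment]]:
    """Return pairs of segments whose time ranges overlap."""
    ordered = sorted(segments, key=lambda s: s.start)
    pairs = []
    for i, a in enumerate(ordered):
        for b in ordered[i + 1:]:
            if b.start >= a.end:
                break  # every later segment starts later still
            pairs.append((a, b))
    return pairs
```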

Accuracy in audio annotation is paramount; thus, verification and quality checks are essential in the preparation process. This phase involves reviewing the annotated data to validate its correctness and consistency. Employing a second set of ears to cross-check annotations helps identify and rectify discrepancies. Additionally, leveraging automatic validation tools can flag potential errors for human reviewers, streamlining the quality assurance process.
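When two annotators label the same clips, their consistency can be summarized with an agreement statistic. The sketch below computes Cohen's kappa, a common choice that corrects raw agreement for agreement expected by chance; it assumes one label per clip from each annotator, and the function names and sample labels are made up for illustration.

```python
from collections import Counter
from typing import List, Sequence


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Cohen's kappa for two annotators labelling the same items."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("Both annotators must label the same non-empty set of items")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


def disagreements(a: Sequence[str], b: Sequence[str]) -> List[int]:
    """Indices of items the annotators labelled differently, for review."""
    return [i for i, (x, y) in enumerate(zip(a, b)) if x != y]


first = ["speech", "speech", "noise", "noise"]
second = ["speech", "noise", "noise", "noise"]
kappa = cohens_kappa(first, second)
to_review = disagreements(first, second)
```

Items returned by `disagreements` are natural candidates for the cross-check described above.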

Finally, transforming annotated data into a format suitable for AI training datasets is critical. This process involves converting annotations into a structured format, such as JSON or XML, that aligns with the requirements of the machine learning models to be trained. Ensuring the data is clean, well-organized, and compatible with AI training platforms facilitates smooth and efficient model training.
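As a rough illustration of the conversion step, the sketch below writes annotations as JSON, one record per recording and one record per line. The field names (`audio`, `sample_rate`, `segments`) and the file path are assumptions for this example; the actual layout depends on what the training platform expects.

```python
import json
from typing import Dict, Iterable, List


def to_training_record(audio_path: str, sample_rate: int, segments: List[Dict]) -> Dict:
    """Build one structured record for a single recording."""
    cleaned = [
        {"start": float(s["start"]), "end": float(s["end"]), "label": s["label"]}
        for s in segments
    ]
    return {
        "audio": audio_path,
        "sample_rate": sample_rate,
        # Sort by start time so downstream code can rely on the order.
        "segments": sorted(cleaned, key=lambda s: s["start"]),
    }


def to_jsonl(records: Iterable[Dict]) -> str:
    """Serialize records as one JSON object per line."""
    return "\n".join(json.dumps(r, sort_keys=True) for r in records)


record = to_training_record(
    "clips/0001.wav",  # hypothetical path
    16000,
    [
        {"start": 2, "end": 3, "label": "glass_break"},
        {"start": 0, "end": 1, "label": "speech"},
    ],
)
output = to_jsonl([record])
```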

Addressing Complexity in Sound Event Detection

Distinguishing between myriad sound events in audio recordings, especially in environments rich with overlapping sounds, presents a formidable challenge. Imagine trying to identify the sound of a specific bird species in a bustling rainforest or distinguishing between different types of vehicle engines in urban traffic; the complexity is staggering. This complexity isn’t just about the variety of sounds; it also stems from variations in volume, distance from the sound source, and interference from background noise, all of which call for robust data collection.

The key to tackling this complexity lies in employing advanced machine learning models and a quality audio annotation tool capable of deep audio analysis. Techniques such as deep learning and neural networks have shown remarkable success in extracting features from complex datasets, enabling models to recognize sound patterns hidden beneath layers of noise. Trained on vast, well-annotated datasets, these models learn to identify the subtle differences that distinguish one sound event from another.

Quality checks are also paramount in ensuring the reliability of sound event detection systems. This process involves verifying the accuracy of sound annotations in training datasets and continuously testing the model’s performance under varied conditions. Implementing robust quality control mechanisms helps refine the model’s ability to handle complex sound environments, minimize errors, and improve detection precision.
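Testing a model's detections against reference annotations can be done in several ways. The sketch below uses one simplified approach: both sets of events are cut into fixed-length time frames, and precision and recall are computed over (frame, label) pairs. The 0.1-second frame length, the event tuples, and the function names are assumptions for illustration rather than a standard evaluation protocol.

```python
from typing import List, Set, Tuple

Event = Tuple[float, float, str]  # (start seconds, end seconds, label)


def to_frames(events: List[Event], hop: float = 0.1) -> Set[Tuple[int, str]]:
    """Convert events into a set of (frame index, label) pairs."""
    frames = set()
    for start, end, label in events:
        first = int(round(start / hop))
        last = int(round(end / hop))
        for i in range(first, last):
            frames.add((i, label))
    return frames


def precision_recall(reference: List[Event], predicted: List[Event],
                     hop: float = 0.1) -> Tuple[float, float]:
    """Frame-level precision and recall of predictions against references."""
    ref = to_frames(reference, hop)
    pred = to_frames(predicted, hop)
    true_positives = len(ref & pred)
    precision = true_positives / len(pred) if pred else 0.0
    recall = true_positives / len(ref) if ref else 0.0
    return precision, recall


# Invented example: the prediction is shifted half a second late.
reference = [(0.0, 1.0, "siren")]
predicted = [(0.5, 1.5, "siren")]
precision, recall = precision_recall(reference, predicted)
```

Because labels are part of each pair, overlapping events with different labels are scored independently, which suits recordings where several sounds occur at once.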

Teaching Your AI Model to Recognize Intricate Sound Patterns

Educating AI models to discern intricate sound patterns requires a strategic approach to training; this involves:

Curating Diverse Audio Datasets: Assemble datasets representing a wide range of audio environments and sound events. Diversity in training data is crucial for developing a model’s ability to generalize from its training and perform accurately in real-world scenarios.

Simulating Real-World Conditions: Incorporate simulations of real-world audio complexities, such as varying sound levels, echo, and background noise, into the training process. This helps the model adapt to the unpredictability of real-life sound detection tasks.

Incremental Learning: Start with simpler sounds and gradually introduce complexity, allowing the AI to build on its learning progressively. This stepwise approach helps solidify the model’s capabilities at each level before moving on to more challenging scenarios.

Feedback Loops: Implement feedback mechanisms that allow the model to learn from its mistakes. By analyzing instances of misidentification or missed detections, the model can adjust its parameters for better future performance.
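As an illustration of the "Simulating Real-World Conditions" step above, one common technique is to mix background noise into a clean recording at a chosen signal-to-noise ratio (SNR). The sketch below works on plain lists of samples to stay dependency-free; `mix_at_snr` and `rms` are hypothetical helper names, and the tone and noise are synthetic stand-ins for real recordings.

```python
import math
import random
from typing import List


def rms(samples: List[float]) -> float:
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))


def mix_at_snr(signal: List[float], noise: List[float], snr_db: float) -> List[float]:
    """Add noise to signal, scaled so the mix has the requested SNR in dB."""
    if len(signal) != len(noise):
        raise ValueError("signal and noise must have the same length")
    noise_rms = rms(noise)
    if noise_rms == 0.0:
        return list(signal)  # silent noise: nothing to add
    # SNR (dB) = 20 * log10(rms(signal) / rms(scaled noise))
    target_noise_rms = rms(signal) / (10 ** (snr_db / 20))
    gain = target_noise_rms / noise_rms
    return [s + gain * n for s, n in zip(signal, noise)]


# Synthetic example: a 440 Hz tone at 16 kHz plus uniform random noise.
random.seed(0)
tone = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(1600)]
hiss = [random.uniform(-1.0, 1.0) for _ in range(1600)]
noisy = mix_at_snr(tone, hiss, snr_db=10.0)
```

Generating several versions of each clip at different SNR values is one way to expose a model to varying sound levels without recording new data; the original annotations still apply because the timing is unchanged.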

AI Audio Data Annotation’s Potential & Ongoing Evolution

The potential applications for AI audio data annotation are as vast as they are vital. From creating more intuitive user interfaces to safeguarding our health and environment, the detailed process of annotating audio data is at the heart of these technological advancements. As AI continues to evolve, the demand for accurately annotated audio datasets will only grow, underscoring the importance of this meticulous task in shaping a future where technology understands not just data but the world around us through sound.

As we’ve shown, audio data annotation plays a critical role across various sectors and is central to developing more responsive AI systems that are attuned to the nuances of the auditory world. For those of you diving even deeper, we hope this article broadened your knowledge base, and we wish you… Happy AI audio listening!

Recent articles

Generative AI summit 2024

Generative AI summit 2024

Client Case Study: Automated Accounting for Intelligent Processing

Client Case Study: Automated Accounting for Intelligent Processing

Client Case Study: AI Logistics Control

Client Case Study: AI Logistics Control

Client Case Study: Inquiry Filter for a CRM Platform

Client Case Study: Inquiry Filter for a CRM Platform

Client Case Study: Product Classification

Client Case Study: Product Classification

Client Case Study: Interius Farms Revolutionizing Vertical Farming with AI and Robotics

Client Case Study: Interius Farms Revolutionizing Vertical Farming with AI and Robotics

Client Case Study: Virtual Apparels Try-On

Client Case Study: Virtual Apparels Try-On

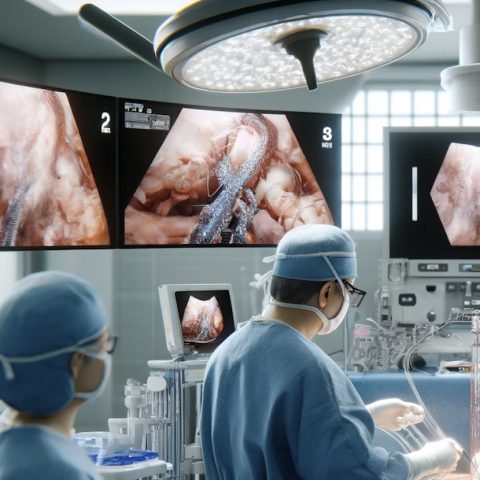

Client Case Study: SURGAR Delivering Augmented Reality for Laparoscopic Surgery

Client Case Study: SURGAR Delivering Augmented Reality for Laparoscopic Surgery

Client Case Study: Drone Intelligent Management

Client Case Study: Drone Intelligent Management

Client Case Study: Query-item matching for database management

Client Case Study: Query-item matching for database management