Enhancing Large Language Models with Data Labeling & Training (Whitepaper)

Dec 21, 2023

I. The Rise of Large Language Models (LLMs)

Recent years have seen phenomenal growth in the field of natural language processing, marked by the advent of groundbreaking Large Language Models such as GPT-3 (Generative Pre-trained Transformer 3) and BERT (Bidirectional Encoder Representations from Transformers). These technologies have changed the way computers interpret and generate human language, paving the way for significant advancements across various domains including artificial intelligence (AI), machine translation, and virtual assistants. This has resulted in their widespread adoption across diverse industries ranging from healthcare and finance to entertainment and customer service, transforming business operations and customer interactions (Devlin et al., 2019; Brown et al., 2020).

Despite their impressive capabilities, LLMs are not without their challenges. Certain limitations continue to impede their potential, underscoring the need for robust and accurately labeled data. This white paper delves into the crucial role of data in harnessing the full capabilities of LLMs, and how SmartOne addresses these challenges through best practices in data labeling and annotation.

II. Advanced Model Architectures in LLMs

As LLMs continue to revolutionize the field of natural language processing, the underlying model architectures play a pivotal role in their capabilities and performance. This section delves into the cutting-edge architectures that are driving the current and future advancements in LLMs.

A. Evolution of Model Architectures

The evolution of LLM architectures has been marked by significant milestones. Early models relied on simpler neural networks, but the advent of deep learning brought more sophisticated structures. The introduction of Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks allowed for better handling of sequential data, a crucial aspect of language processing. However, these models often struggled with long-term dependencies and computational efficiency.

The breakthrough came with the development of the Transformer architecture, introduced in the paper “Attention Is All You Need” by Vaswani et al. (2017). Transformers abandoned the recurrent layers in favor of attention mechanisms, enabling the model to process input data in parallel and focus on different parts of the input sequence, enhancing both efficiency and context understanding.

B. Transformer Models: The Core of Modern LLMs

Transformer models have become the backbone of modern LLMs, including notable examples like GPT and BERT. These models have several key features:

Self-Attention Mechanism: This allows the model to weigh the importance of different words in a sentence, providing a dynamic understanding of the context.

Layered Structure: Multiple layers of attention and feed-forward networks enable the model to learn complex patterns and relationships in the data.

Pretraining and Fine-Tuning: Transformer models are often pretrained on vast datasets and then fine-tuned for specific tasks, making them highly versatile.
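
To make the self-attention idea concrete, here is a minimal sketch of scaled dot-product attention for a single head, written in plain Python. It is an illustration only: the toy embeddings are invented, and the learned query, key, and value projections that a real Transformer applies are omitted (the token vectors are used directly as queries, keys, and values).

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention for one head.

    Each argument is a list of equal-length vectors, one per token.
    Returns (outputs, weights); weights[i][j] is how strongly token i attends to token j.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        # Output is the attention-weighted average of the value vectors
        out = [sum(w[j] * values[j][c] for j in range(len(values)))
               for c in range(len(values[0]))]
        weights.append(w)
        outputs.append(out)
    return outputs, weights

# Toy input (assumption): three tokens with 2-dimensional embeddings
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1, so every output vector is a weighted mix of all tokens in the sequence, which is what lets the model draw on context from anywhere in the input.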

C. Beyond Transformers: Emerging Architectures

While Transformers currently dominate the field, research continues to evolve:

Efficient Transformers: Variants like the Reformer or Linformer aim to reduce the computational complexity of standard Transformer models, enabling them to handle longer sequences more efficiently.

Hybrid Models: Combining aspects of Transformers with other neural network architectures to leverage the strengths of each.

Graph Neural Networks (GNNs): For tasks that involve understanding relationships and structures, such as in knowledge graphs, GNNs are being explored as an adjunct or alternative to Transformer models.

D. Challenges and Future Directions

Despite their successes, Transformer models face challenges like computational intensity, especially when scaling up to process large datasets. There’s also an ongoing effort to make these models more interpretable and to understand their decision-making processes.

Future research is focusing on creating more efficient, adaptable, and transparent models. This includes exploring new architectures that can handle diverse and multimodal data, and models that can learn more effectively with less data – a move towards more data-efficient AI.

III. The Foundation: Data in LLMs

At the core of LLMs’ success lies their training data. These models are fed vast and diverse datasets comprising text from various sources such as the internet, books, and articles. The quality and characteristics of this training data are of utmost importance as they influence the model’s performance, ethical considerations, and potential biases that may arise.

A. The Necessity of Quality Data

The essence of AI lies in its ability to process vast amounts of data, and LLMs are no exception. These models rely on extensive text data to learn and understand the intricacies of human language, including its patterns, context, and subtle nuances. The quality of this data is a key determinant of the model’s performance and its efficacy in real-world applications (Vaswani et al., 2017). Poorly labeled or inaccurate data can lead to incorrect conclusions, biased models, and ultimately, unreliable AI systems.

B. Data Quality’s Influence on Performance and Reliability

The reliability and performance of LLMs are intrinsically linked to the quality of their training data. Inaccuracies or inconsistencies in the data can result in errors and biases, compromising the AI system’s reliability (Bender et al., 2021). Ensuring high-quality, accurately labeled datasets is therefore a top priority, as it enables LLMs to generate coherent and contextually relevant text. Addressing data quality concerns is fundamental to unlocking the full potential of LLMs in various applications.

IV. Data Labeling and Annotations

Data labeling and annotation are key processes in training LLMs. This involves assigning relevant labels or annotations to raw data, which aids the AI models in understanding the context, intent, and semantics within text data. These annotations provide the necessary context and semantics for the models to generate coherent and meaningful responses (Brown et al., 2020).

A. Understanding the Limitations of Current LLMs

Current LLMs, despite their advancements, have several limitations that affect their overall performance. These include biases in responses, a lack of contextual understanding, and the need for more comprehensive training data. Tackling these limitations is crucial for unleashing the full potential of LLMs across various applications, from customer service chatbots to content generation platforms (Bender et al., 2021).

B. The LLM Training Process

The training process for LLMs is a multi-step procedure that involves data collection, data pre-processing, annotation, and model training. Each step plays a critical role in ensuring the model’s effectiveness. The process begins with collecting a diverse and representative dataset, followed by cleaning and formatting the data during pre-processing. Annotation then involves labeling the data to teach the model about language understanding and context. Finally, the model is trained using deep learning techniques to recognize patterns in the data (Vaswani et al., 2017).
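
As a rough illustration of the pre-processing step described above, the sketch below cleans raw text, drops very short documents, and removes exact duplicates. The function names, the `min_words` threshold, and the sample documents are assumptions made for this example; production pipelines typically add language detection, near-duplicate detection, and content filtering.

```python
import re
import unicodedata

def clean_text(text):
    """Normalize Unicode, strip HTML-like tags, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip()

def preprocess(raw_documents, min_words=3):
    """Clean documents, drop very short ones, and remove case-insensitive exact duplicates."""
    seen = set()
    cleaned = []
    for doc in raw_documents:
        text = clean_text(doc)
        key = text.lower()
        if len(text.split()) < min_words or key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned

# Hypothetical raw inputs
raw = [
    "<p>Hello   world, this is a test.</p>",
    "hello world, this is a test.",
    "Too short",
]
corpus = preprocess(raw)
```

Here only the first document survives: the second is a duplicate once case is ignored, and the third falls below the word threshold.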

C. Best Practices in Data Labeling and Annotation

In the realm of data labeling and annotation, it is important to follow best practices to ensure high-quality outputs. These include implementing stringent quality control measures, validating the data regularly, and balancing between human annotation and automated methods. Additionally, scalable annotation platforms and outsourcing options can be explored to efficiently handle large datasets (Brown et al., 2020).
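
One common way to quantify labeling quality is inter-annotator agreement. The sketch below computes Cohen’s kappa for two annotators who labeled the same items; the labels are invented for illustration, and the choice of kappa (rather than another agreement statistic) is an assumption of this example, not a practice stated in this paper.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Fraction of items on which the annotators agree
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical sentiment labels from two annotators
annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_b = ["pos", "neg", "neg", "neg", "pos", "neg"]
kappa = cohens_kappa(annotator_a, annotator_b)
```

A kappa near 1 indicates strong agreement, while a value near 0 means the annotators agree no more often than chance would predict, which is usually a signal to revisit the labeling guidelines.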

V. Challenges and Solutions in Data Labeling

Data labeling, the process of identifying and tagging raw data for AI models, is a critical but often challenging aspect of training LLMs. This section discusses common challenges in data labeling and how SmartOne’s innovative solutions address these issues, enhancing the accuracy and efficiency of LLMs.

A. Challenge: Volume and Scalability

One of the primary challenges in data labeling is managing the immense volume of data required to effectively train and fine-tune Large Language Models. This data must not only be labeled but also validated and possibly re-labeled as models evolve, especially when adapting to advanced training methodologies.

Solution: Automated Tools and Scalable Platforms

To tackle the significant volume of data required for training and fine-tuning LLMs, SmartOne adopts a versatile approach. We leverage a combination of our own automated tools, third-party resources, and exclusive tools accessible through our technology partnerships. This flexible approach allows us to efficiently handle large-scale data labeling projects, especially those involving sophisticated fine-tuning methods such as Reinforcement Learning from Human Feedback (RLHF) and Supervised Fine-Tuning (SFT), as well as prompt-based training. These methodologies are integral to enhancing the performance and applicability of LLMs in diverse scenarios, requiring meticulous data labeling and validation processes that our platforms are well-equipped to handle.

B. Challenge: Maintaining High-Quality and Accuracy

Quality is paramount in data labeling, as inaccuracies can lead to biases and errors in the final AI model.

Solution: Rigorous Quality Control and Expert Annotators

SmartOne implements stringent quality control measures at every stage of the labeling process. Expert annotators are employed to oversee the accuracy of labeled data, and regular audits are conducted to ensure the highest quality standards. Training programs for annotators are continually updated to keep pace with evolving data requirements.
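
As a generic illustration of how a quality-control step can route work to expert reviewers, the sketch below takes labels from several annotators per item, accepts the majority label when agreement is high enough, and flags the rest for review. This is a hypothetical example, not a description of SmartOne’s internal tooling; the function name, the `min_agreement` threshold, and the sample data are all assumptions.

```python
from collections import Counter

def aggregate_labels(annotations, min_agreement=0.6):
    """Majority-vote aggregation with an escalation list.

    annotations: dict mapping item id -> list of labels from different annotators.
    Returns (consensus, needs_review).
    """
    consensus, needs_review = {}, []
    for item_id, labels in annotations.items():
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            consensus[item_id] = label
        else:
            # Too much disagreement: send to an expert annotator
            needs_review.append(item_id)
    return consensus, needs_review

# Hypothetical annotations from three annotators per item
annotations = {
    "doc-1": ["spam", "spam", "spam"],
    "doc-2": ["spam", "ham", "ham"],
    "doc-3": ["spam", "ham", "other"],
}
consensus, needs_review = aggregate_labels(annotations)
```

With these inputs, `doc-1` and `doc-2` receive consensus labels, while `doc-3`, on which all three annotators disagree, is escalated.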

C. Challenge: Data Diversity and Representation

Ensuring diverse and representative datasets is critical to prevent biases in AI models. However, achieving this diversity in data collection and labeling can be complex.

Solution: Diverse Data Sourcing and Inclusive Labeling Practices

SmartOne is committed to championing and enhancing diversity and inclusiveness in our projects. Our diverse team of annotators, mainly from Africa, brings a breadth of perspectives to the data labeling process. This diversity is not just geographical but also encompasses various cultural and linguistic backgrounds, ensuring the data we label is inclusive and culturally relevant. This approach is crucial in developing LLMs that are fair, unbiased, and truly global in their understanding.

D. Challenge: Complex and Specialized Data Requirements

LLMs in specialized fields like healthcare or finance require data that is not only accurately labeled but also complies with industry-specific standards and regulations.

Solution: Domain-Specific Expertise and Compliance

SmartOne collaborates with domain experts to understand the nuances of specialized fields. These experts guide the labeling process to ensure that the data meets both the technical requirements of LLMs and the regulatory standards of the industry. Compliance teams are also in place to regularly review and update data handling practices.

E. Challenge: Keeping Pace with Evolving AI Models

As AI models evolve, particularly in the realm of fine-tuning methods like RLHF, SFT, and prompt-based training, the data labeling requirements also change. Adapting to these evolving needs is a continuous challenge.

Solution: Agile Methodologies and Continuous Learning

SmartOne employs agile methodologies, allowing for swift adaptation to the latest fine-tuning techniques in LLM training. Our continuous learning programs for annotators are regularly updated to encompass new developments in RLHF, SFT, and prompt-based training, ensuring our data labeling processes remain aligned with the cutting-edge advancements in AI and LLMs.

VI. Federated Learning for Privacy-Preserving LLMs

LLMs are integral to various applications, yet their training often involves massive datasets, raising significant privacy concerns. Traditional centralized training methods necessitate pooling diverse data into a central server, which can pose potential risks if sensitive information is involved. Addressing these concerns is paramount for fostering user trust and ensuring responsible AI development.

A. Privacy Concerns in LLM Training

Federated Learning emerges as an innovative solution to the privacy challenges inherent in LLM training. This collaborative approach enables models to be trained across decentralized devices or servers without the exchange of raw data. Instead, only model updates are communicated, thereby preserving the privacy of individual user inputs. This approach not only enhances privacy but also mitigates the risks associated with centralizing sensitive information.

B. Key Components of Federated Learning

Decentralized Training Servers: In a federated learning setup, training occurs on local servers or devices, obviating the need for a central server to host the entire dataset. This decentralized structure significantly minimizes the chances of a data breach.

Secure Model Aggregation: Model updates from local servers are securely aggregated to create a global model. Advanced cryptographic techniques ensure that individual updates remain private during this aggregation process.

Differential Privacy Measures: Federated Learning often incorporates differential privacy, adding noise to individual updates to prevent the extraction of specific information. This statistical privacy measure adds an extra layer of protection to sensitive data.
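
The components above can be sketched as a single aggregation round: each client sends only a weight update, the server clips and averages the updates, and Gaussian noise is added in the spirit of differential privacy. This is a simplified illustration under stated assumptions. The function names, `max_norm`, and `noise_std` are hypothetical; the cryptographic secure-aggregation step is not shown; and the noise level here is not calibrated to any formal privacy guarantee.

```python
import math
import random

def clip_update(update, max_norm):
    """Scale an update so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]

def federated_round(global_weights, client_updates, max_norm=1.0, noise_std=0.0, rng=None):
    """One server-side round: clip each client update, average, add Gaussian noise."""
    rng = rng or random.Random()
    clipped = [clip_update(u, max_norm) for u in client_updates]
    n = len(clipped)
    averaged = [sum(u[i] for u in clipped) / n for i in range(len(global_weights))]
    noisy = [a + rng.gauss(0.0, noise_std) for a in averaged]
    # Raw client data never reaches the server; only these updates do
    return [w + d for w, d in zip(global_weights, noisy)]

# Hypothetical updates from two clients for a 2-parameter model
global_weights = [0.0, 0.0]
client_updates = [[0.3, 0.4], [3.0, 4.0]]
new_weights = federated_round(global_weights, client_updates, max_norm=1.0, noise_std=0.0)
```

Clipping bounds how much any single client can influence the global model, which is what makes added noise meaningful as a privacy measure. Setting `noise_std` above zero trades some accuracy for stronger protection of individual updates.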

C. Benefits of Federated Learning for LLMs

Privacy Preservation: The foremost advantage of Federated Learning is the preservation of user privacy. This approach enables LLMs to learn from a diverse range of data without compromising the confidentiality of individual contributions.

Reduced Data Transfer: Since only model updates are communicated, the amount of data transferred between devices or servers is significantly reduced compared to traditional centralized training. This is particularly beneficial for users with limited bandwidth.

Edge Device Training: Federated Learning supports training models on edge devices, such as smartphones or IoT devices. This empowers devices to learn locally and contribute to the global model without exposing sensitive information.

D. Challenges and Future Directions

Despite its promising potential, Federated Learning faces challenges such as communication efficiency, model synchronization, and robustness to non-IID data, that is, data that is not independent and identically distributed across clients. Future directions include optimizing federated algorithms, addressing security concerns, and promoting standardized frameworks for interoperability.

Federated Learning stands as a pioneering privacy-preserving paradigm for training Large Language Models. By facilitating collaboration without compromising sensitive data, this approach aligns with ethical AI practices and contributes to the development of responsible and trustworthy language models.

VII. Multimodal Data Labeling

The integration of multimodal data—combining text with images, audio, or other sensory inputs—has become increasingly vital for advancing LLMs. Multimodal learning enhances the model’s understanding of context and provides a more comprehensive basis for generating nuanced and contextually relevant responses (Vaswani et al., 2017).

A. Challenges in Multimodal Data Labeling

Effectively leveraging multimodal data requires precise and diverse labeling. This poses unique challenges due to the varied nature of multimodal inputs. Unlike text, which is inherently present in LLM training, other modalities demand specialized annotation processes. Images may require object recognition, audio needs transcription, and video necessitates frame-level labeling. Achieving accurate and comprehensive labeling across these diverse modalities is essential for training robust multimodal LLMs.

B. Strategies for Multimodal Data Labeling

Transfer Learning for Modalities: Leveraging pretrained models for individual modalities, such as image classifiers or speech recognition models, can significantly reduce the labeling effort. This transfer learning approach allows the LLM to benefit from existing labeled datasets for specific modalities (Pan & Yang, 2010).

Crowdsourcing and Expert Annotation: Combining the power of crowdsourcing with expert annotation ensures both diversity and accuracy in multimodal data labeling. Crowdsourced annotators can handle large volumes of data, while domain experts contribute nuanced insights, particularly in specialized fields (Howe, 2006).

Active Learning for Multimodal Inputs: Implementing active learning strategies for multimodal data involves iteratively selecting examples that are challenging for the model. By focusing on instances where the model exhibits uncertainty, this approach optimizes the labeling process, ensuring that efforts are directed where they are most needed (Settles, 2009).
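
The active learning strategy above can be sketched with entropy-based uncertainty sampling: items whose predicted class probabilities are closest to uniform are sent to annotators first. The item identifiers, probabilities, and `budget` parameter below are invented for illustration, and entropy is only one of several uncertainty measures discussed in the active learning literature.

```python
import math

def entropy(probs):
    """Shannon entropy of a probability distribution (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_labeling(predictions, budget):
    """Return the ids of the `budget` items the model is least certain about.

    predictions: dict mapping item id -> list of predicted class probabilities.
    """
    ranked = sorted(predictions, key=lambda item: entropy(predictions[item]), reverse=True)
    return ranked[:budget]

# Hypothetical model outputs for three unlabeled multimodal items
predictions = {
    "img-1": [0.98, 0.01, 0.01],   # confident
    "img-2": [0.40, 0.35, 0.25],   # very uncertain
    "clip-3": [0.70, 0.20, 0.10],  # somewhat uncertain
}
to_label = select_for_labeling(predictions, budget=2)
```

With a budget of two, the uncertain items `img-2` and `clip-3` are selected, while the confidently classified `img-1` is left unlabeled for now.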

C. Benefits of Multimodal Learning for LLMs

Contextual Understanding: Multimodal data enriches the LLM’s contextual understanding by considering a broader spectrum of information. This leads to more nuanced responses that integrate information from various modalities.

Real-World Applications: The application of multimodal learning extends to real-world scenarios such as image captioning, voice-activated assistants, and content generation. LLMs trained on multimodal data excel in tasks that require a holistic understanding of diverse inputs.

VIII. Integrating Synthetic Data in LLM Training

In recent advancements within the realm of LLMs, the emergence of synthetic data stands out as a significant innovation. Synthetic data, artificially generated data that mimics real-world data, offers a new frontier in training LLMs. It addresses challenges like data scarcity, privacy concerns, and the need for diversified data sets.

A. The Role of Synthetic Data in Enhancing LLMs

Synthetic data can substantially augment the training material for LLMs, particularly in scenarios where real data is limited or sensitive. By generating realistic, yet artificial, datasets, LLMs can be exposed to a wider array of scenarios and linguistic variations. This broadens their understanding and responsiveness, making them more versatile and robust in their applications.
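
A very simple form of synthetic data generation is template filling, sketched below for an intent-classification dataset. The templates, product names, and record fields are all invented for this example; in practice synthetic text is often produced by generative models rather than fixed templates.

```python
import random

# Hypothetical templates keyed by the intent label they illustrate
TEMPLATES = {
    "billing": [
        "I was charged twice for my {product}.",
        "Why did the price of my {product} change?",
    ],
    "support": [
        "My {product} stopped working after the update.",
        "How do I reset my {product}?",
    ],
}
PRODUCTS = ["router", "subscription", "phone plan"]

def generate_synthetic_examples(n, seed=0):
    """Generate n labeled examples; a fixed seed makes the output reproducible."""
    rng = random.Random(seed)
    examples = []
    for _ in range(n):
        intent = rng.choice(sorted(TEMPLATES))
        text = rng.choice(TEMPLATES[intent]).format(product=rng.choice(PRODUCTS))
        # The "synthetic" flag lets later review steps tell generated data from real data
        examples.append({"text": text, "intent": intent, "synthetic": True})
    return examples

examples = generate_synthetic_examples(5, seed=42)
```

Marking each record as synthetic keeps its provenance visible, so generated examples can be sampled for human review or weighted differently from real data during training.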

B. Synergy with Human-in-the-Loop Annotations

While synthetic data opens new avenues in LLM training, it doesn’t replace the nuanced understanding and contextual insights provided by human annotators. Here, SmartOne’s data labeling services play a crucial role. Human-in-the-loop annotations ensure that the synthetic data is aligned with real-world contexts and cultural nuances, bridging the gap between artificial and authentic human interactions.

C. Reinforcement Learning from Human Feedback (RLHF)

The integration of synthetic data with human feedback creates a dynamic training environment for LLMs. Human annotators from SmartOne can provide essential feedback, correcting and refining the model’s understanding based on the synthetic data. This iterative process enhances the model’s accuracy, ensuring that the LLMs are not only data-rich but also contextually aware and ethically aligned.
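
In a common RLHF setup, human feedback is collected as pairwise preferences: an annotator sees two responses to the same prompt and marks the better one, and a reward model is then trained to score the chosen response higher. The sketch below shows one such record and the pairwise (Bradley-Terry style) loss typically used for this purpose. The record fields and reward scores are hypothetical, and the paper does not specify which feedback format is used in practice.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected)).

    Small when the reward model scores the human-preferred response higher.
    """
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    # Equivalent form that avoids overflow for large negative margins
    return -margin + math.log1p(math.exp(margin))

# Hypothetical annotation record produced by a human labeler
record = {
    "prompt": "Summarize the refund policy in one sentence.",
    "chosen": "Refunds are available within 30 days with a receipt.",
    "rejected": "Our company was founded in 1999.",
}

# Hypothetical scores from a reward model for the two responses
loss_good = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
loss_bad = preference_loss(reward_chosen=0.0, reward_rejected=2.0)
```

The loss is lower when the reward model agrees with the annotator (`loss_good`) than when it disagrees (`loss_bad`), so accurate and consistent human judgments directly shape what the model learns to prefer.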

D. Positioning SmartOne in the Era of Synthetic Data

In the evolving landscape of LLM training, SmartOne positions itself as an indispensable partner. By offering expert data labeling services that complement synthetic data, SmartOne ensures that LLMs trained with both real and synthetic data maintain a high standard of reliability, contextual understanding, and ethical soundness.

IX. Ethical Considerations in LLM Training

The rise of LLMs and their increasing prominence across various applications necessitates a thorough examination of the ethical implications associated with their training and deployment. This ensures that the technology aligns with societal values and contributes positively to human progress.

A. Bias Mitigation Strategies

Bias in Training Data: A significant ethical concern in LLMs is the potential biases embedded within them, stemming from the data used for training. These biases can inadvertently perpetuate existing societal inequalities and prejudices.

Multifaceted Approach for Bias Mitigation: Addressing bias requires a comprehensive strategy that encompasses the evaluation of training datasets, ongoing monitoring of model outputs for biased tendencies, and the development of techniques specifically aimed at rectifying biases during the training phase.
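
One small part of evaluating a training dataset for bias is checking how well each group is represented. The sketch below reports each group’s share of the records and flags groups that fall below a threshold. The `language` field, the `min_share` value, and the sample records are assumptions for illustration, and representation counts alone do not establish that a dataset is unbiased.

```python
from collections import Counter

def representation_report(records, group_key, min_share=0.15):
    """Return each group's share of the dataset and the groups below min_share."""
    counts = Counter(r[group_key] for r in records)
    total = sum(counts.values())
    shares = {group: count / total for group, count in counts.items()}
    underrepresented = sorted(g for g, s in shares.items() if s < min_share)
    return shares, underrepresented

# Hypothetical dataset: 8 English, 1 Swahili, and 1 Yoruba record
records = (
    [{"language": "en"}] * 8
    + [{"language": "sw"}]
    + [{"language": "yo"}]
)
shares, underrepresented = representation_report(records, "language")
```

A report like this can guide targeted data collection or re-sampling before training, and it can be rerun as the dataset grows.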

B. Transparency and Explainability

Importance of Transparency: Transparency is paramount in building user trust. Users should have a clear and comprehensive understanding of how LLMs function and arrive at specific decisions.

Enhancing Explainability: This involves devising methods that trace model decisions back to the original inputs, thereby making the decision-making process more understandable and interpretable for both users and stakeholders.

C. User Privacy and Data Security

Balancing Data Collection and Privacy: The collection and utilization of data for training LLMs brings forth concerns regarding user privacy. Striking the right balance between collecting data to improve models and safeguarding user privacy is essential.

Implementing Privacy-Preserving Measures: This can be achieved by anonymizing data, enacting stringent access controls, and utilizing encryption methods to secure user information and prevent unauthorized access.
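
As a minimal sketch of the anonymization step, the example below replaces email addresses and phone numbers with placeholder tokens before text enters a training set. The regular expressions are deliberately simple assumptions for illustration: they will miss many formats and can produce false positives, so real pipelines usually combine pattern matching with trained entity recognizers and human spot checks.

```python
import re

# Simplified patterns (assumptions); not exhaustive
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

sample = "Contact jane.doe@example.com or +1 (555) 123-4567 today."
redacted = redact(sample)
```

Using typed placeholders such as `[EMAIL]` rather than deleting the text preserves sentence structure, so the model can still learn from the surrounding context.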

D. Avoidance of Harmful Use Cases

Proactive Assessment of Societal Impacts: LLM training should involve a proactive approach in identifying and mitigating potential harmful use cases. Assessing the possible societal impacts and ramifications of LLM applications is crucial.

Collaborative Framework Development: Engaging with ethicists, policymakers, and the broader community to establish comprehensive guidelines and frameworks can prevent malicious uses and unintended negative consequences.

E. Inclusive Representation in Training Data

Importance of Diverse Voices: Ensuring a diverse range of voices, perspectives, and cultural nuances are represented in the training data is vital. This helps prevent the reinforcement of existing biases and guarantees equitable performance across various demographic groups.

Continuous Improvement and Updating: LLMs should be continually updated and improved upon to ensure that they adapt to and accurately represent the evolving societal and cultural landscapes.

F. Addressing Hallucinations in Model Outputs

Challenge of Hallucinations: One emerging ethical concern is the tendency of LLMs to “hallucinate,” or generate false information that appears plausible. This can lead to the dissemination of incorrect data, potentially causing harm in scenarios where accuracy is critical.

Mitigating Hallucinations: To tackle this, it’s crucial to incorporate methodologies in LLM training that identify and reduce the occurrence of hallucinations. This involves refining training datasets, improving model architectures, and implementing robust validation checks. Regular monitoring and updating of the models to identify and correct such errors are essential.

User Awareness: It’s also important to educate users about this aspect of LLMs, ensuring they approach model outputs with a critical eye, especially in high-stakes scenarios.

G. Collaborative Efforts for Ethical Standards

Community Engagement: Addressing ethical considerations in LLM training is a collaborative effort involving developers, researchers, policymakers, and the broader public.

Comprehensive Strategies: By implementing bias mitigation strategies, transparency measures, privacy safeguards, and addressing challenges like hallucinations, the development and deployment of LLMs can align with ethical standards.

Trust and Responsibility: This fosters a sense of trust and promotes responsible use, ensuring that LLMs are beneficial tools in our ever-evolving technological landscape.

X. Continuous Learning and Adaptation in LLMs

In the rapidly evolving field of AI and LLMs, continuous learning and adaptation are essential for maintaining the relevance and effectiveness of these models. SmartOne plays a vital role in this ongoing process with its expert data labeling services. This section discusses how our approaches contribute to the continuous learning and adaptation of LLMs.

A. Importance of Quality Data Labeling for Continuous Learning

Continuous learning in LLMs is fundamentally reliant on the quality and relevance of the training data they receive. As language evolves and new data emerges, it’s essential for LLMs to receive updated datasets to learn from.

Solution: Dynamic Data Labeling Processes

SmartOne offers dynamic data labeling services that adapt to the evolving linguistic landscape. We ensure that the training data for LLMs is not only high-quality but also reflects the latest trends and usage patterns. Our commitment to providing continually updated, annotated data allows LLMs to adapt and stay current with linguistic developments.

B. Feedback-Driven Data Labeling

Gaining insights from the real-world applications of LLMs is critical for identifying areas where data labeling requires refinement or expansion.

Solution: Responsive Data Labeling Framework

Our data labeling framework is intentionally designed to be responsive to the feedback we receive. This agility allows us to quickly adjust labeling strategies to the changing requirements of LLMs, ensuring that the training data remains both relevant and effective.

C. Supporting LLM Adaptation to Contextual and Cultural Variations

Language is inherently diverse, varying significantly across different cultures and contexts. To ensure the effectiveness of LLMs in such varied settings, data labeling must account for these linguistic differences.

Solution: Culturally Diverse Data Annotation

At SmartOne, we employ a team of annotators from diverse cultural backgrounds. This diversity enriches our data labeling process, enabling LLMs to adapt to and understand a vast array of linguistic nuances and cultural variations.

D. Aligning with Latest Developments in AI and LLMs

As the fields of AI and LLMs continue to advance, the requirements for data labeling and project execution evolve correspondingly. Staying abreast of these technological advancements is essential for delivering effective data labeling and innovative solutions in LLM applications.

Solution: Training the Launch/Project Team for Innovative Solutions

Focus on Project Team Expertise: At SmartOne, we prioritize the continuous training and development of our Launch/Project Team, ensuring they are well-versed in the latest trends and advancements in LLM technology. This knowledge is crucial in guiding the overall direction of projects and in making informed decisions at every stage of the data labeling and LLM training process.

Expertise in Various Use Cases: Our team’s expertise spans a wide array of LLM use cases, allowing us to offer tailored and cutting-edge solutions to our clients. This expertise is continuously enhanced through regular training sessions, workshops, and industry conferences.

Challenging and Innovating with Clients: With our deep understanding of LLM capabilities and trends, we are uniquely positioned to challenge our clients with new ideas and designs. Our project teams use their knowledge to propose innovative approaches and solutions, pushing the boundaries of what’s possible with LLM technology.

Collaboration and Continuous Improvement: We foster an environment of collaboration and continuous improvement, where our project teams are encouraged to share knowledge, learn from each other, and stay ahead of the curve. This collaborative approach ensures that our clients benefit from the most advanced and effective LLM strategies.

XI. Applying LLMs in Industry

LLMs have proven invaluable in various domains such as content generation, summarization, natural language interfaces, and sentiment analysis. Their ability to generate human-like text across different styles and tones has revolutionized content creation. Similarly, their proficiency in summarizing lengthy documents has greatly improved information retrieval processes. Moreover, natural language interfaces powered by LLMs have transformed user interactions with technology. Their precision in sentiment analysis has also provided valuable insights into public perception and opinions (Brown et al., 2020).

In healthcare, LLMs can assist in diagnosing diseases and providing personalized treatment recommendations based on a patient’s symptoms and medical history (Esteva et al., 2019). They can also improve the efficiency of clinical trials by analyzing vast amounts of data and identifying patterns that may lead to new breakthroughs.

In the finance sector, LLMs can help investors make informed decisions by analyzing market trends, news articles, and financial reports. They can also assist in detecting fraudulent activities by scanning through large volumes of transactions and flagging suspicious patterns (Arner et al., 2017).

In addition, LLMs have promising applications in customer service. They can be used to develop chatbots that provide accurate responses to customer queries, resulting in enhanced user experiences and reduced workload for human agents.

XII. Integrating LLMs into Augmented Reality

Augmented reality (AR) technology has the potential to revolutionize human-computer interaction by merging virtual elements with the real world. LLMs can play a significant role in enhancing this experience further.

Improved Information Retrieval: With AR, users can access additional information about their surroundings in real-time. By integrating LLMs into this process, users will have access to a wealth of knowledge through natural language queries (Azuma, 1997).

Enhanced Virtual Assistance: Combining AR with LLMs enables more intuitive and interactive virtual assistants. Users can converse naturally with digitally rendered objects or characters, which draw on the language model to answer questions in real time.

Contextualized Language Understanding: AR supplies spatial and temporal information about the user’s environment, giving LLMs the context they need to interpret user instructions and improving accuracy during interactions.

XIII. Future Research Directions in LLMs

The field of LLMs is rapidly evolving, driven by continuous advancements in technology and research. This section explores the anticipated future trends and potential research directions that are set to shape the development of LLMs.

A. Towards Greater Model Efficiency

One of the significant trends in LLM research is the push towards more efficient models. As LLMs grow in size and complexity, the computational resources required to train and run these models escalate.

Lightweight Models and Algorithmic Innovations

Future research is expected to focus on developing lightweight models that require less computational power without sacrificing performance. This includes algorithmic innovations that streamline model architectures and data processing techniques, making LLMs more accessible and sustainable.

B. Enhanced Multimodal Capabilities

LLMs have traditionally excelled in processing text, but the future lies in their ability to understand and generate content across multiple modalities, such as images, audio, and video.

Advanced Multimodal LLMs

Research is gearing towards creating LLMs that can seamlessly integrate information from various sensory inputs, providing a more holistic understanding of complex data. This advancement will open new avenues in AI applications, from enhanced virtual assistants to sophisticated content creation tools.

C. Improving Model Explainability and Transparency

As LLMs become more integrated into critical sectors, the need for model explainability and transparency becomes paramount.

Explainable AI (XAI)

Future research will likely focus on developing methods and frameworks for Explainable AI (XAI) in the context of LLMs. This includes creating models whose decision-making processes are interpretable and understandable by humans, ensuring trust and accountability in AI systems.

D. Ethical AI, Bias Mitigation, and Data Privacy

The ethical implications of AI, particularly in terms of bias and fairness, continue to be a crucial area of focus.

Ethical Frameworks and Diverse Data Sets

Research will continue to develop ethical frameworks and guidelines for AI. This includes building more diverse and representative training datasets, along with techniques for detecting and mitigating biases in AI models.

Data Privacy Considerations

Additionally, future research will increasingly address data privacy within these frameworks. Ensuring that LLMs are trained on data that respects user consent and privacy norms will be a key focus, alongside bias mitigation.

E. Expanding Real-World Applications and Industry Integration

LLMs are set to become more deeply integrated into various industries, transforming how businesses operate and how services are delivered.

Industry-Specific LLMs and Collaboration

Future trends include developing industry-specific LLMs tailored to the unique needs of sectors like healthcare, finance, and legal. Collaborations between AI researchers and industry experts will be key in driving these developments.

F. Advancements in Federated Learning and Privacy-Preserving Techniques

As data privacy continues to be a major concern, federated learning and other privacy-preserving techniques will become increasingly important in LLM training.

Secure Data Sharing and Decentralized Learning Models

Research will focus on enhancing federated learning algorithms and developing new methods for secure data sharing and decentralized learning. These advancements aim to ensure user privacy while benefiting from collective learning.
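The core idea of federated learning can be illustrated with a minimal sketch of federated averaging, one commonly used aggregation rule. It assumes each client trains locally and sends back only its model weights and the number of local examples; the function name `federated_average` and the toy weight vectors are hypothetical and chosen only for illustration.

```python
def federated_average(client_updates):
    """Combine client model weights into one global model.

    client_updates: list of (weights, num_examples) pairs, where `weights`
    is a list of floats of equal length for every client. Raw training data
    never leaves the client; only these weights are shared.
    """
    total_examples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    # Weight each client's contribution by how much data it trained on.
    return [
        sum(weights[k] * n for weights, n in client_updates) / total_examples
        for k in range(dim)
    ]


# Hypothetical updates from two clients holding 10 and 30 local examples.
client_updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
global_weights = federated_average(client_updates)
print(global_weights)
```

In practice this step would be repeated over many rounds, and it is often combined with additional protections such as secure aggregation or differential privacy, since shared weights can still leak information.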

Incorporating Privacy in LLM Training

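Beyond federated learning, privacy-preserving techniques such as differential privacy and homomorphic encryption are expected to play a growing role in LLM training, in response to rising concerns over data privacy in AI development.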

As these trends and research directions mature, the continued development and refinement of LLMs promises to unlock further applications and capabilities.

XIV. The Bright Future of LLMs

A. Training Strategies for Improving LLM Performance

Training LLMs on diverse and representative data that covers a wide range of topics, perspectives, and language styles is crucial for improving their performance. Such a dataset helps LLMs handle varied user inputs and better understand different types of queries or requests (Vaswani et al., 2017). Active learning techniques can also enhance LLM performance: by selecting unlabeled examples based on uncertainty measures or prediction confidence scores, trainers can concentrate annotator resources on the challenging instances most likely to yield performance gains. Curriculum learning, in which models are exposed to gradually increasing levels of complexity during training, can be beneficial as well. It lets LLMs learn simpler patterns before progressing to more complex linguistic structures, laying a foundation for handling challenging tasks (Bengio et al., 2009).
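The two selection ideas in this section can be sketched in a few lines of Python. The example below is a minimal illustration, not a production pipeline: it assumes the model exposes per-class probabilities for each unlabeled example, uses prediction entropy as the uncertainty measure, and uses word count as a stand-in difficulty score for curriculum ordering. The function names and sample data are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(predictions, budget):
    """Uncertainty sampling: indices of the `budget` most uncertain examples."""
    ranked = sorted(
        range(len(predictions)),
        key=lambda i: entropy(predictions[i]),
        reverse=True,
    )
    return ranked[:budget]


def curriculum_order(examples, difficulty):
    """Curriculum learning: order examples from easiest to hardest."""
    return sorted(examples, key=difficulty)


# Hypothetical class probabilities for four unlabeled texts.
predictions = [
    [0.98, 0.01, 0.01],  # model is confident
    [0.40, 0.35, 0.25],  # model is unsure
    [0.70, 0.20, 0.10],
    [0.34, 0.33, 0.33],  # model is least sure
]
to_label = select_for_annotation(predictions, budget=2)

# Word count is only a crude proxy for linguistic difficulty (an assumption).
sentences = [
    "The model, which was trained on noisy data, failed.",
    "Cats sleep.",
    "Birds sing at dawn.",
]
ordered = curriculum_order(sentences, difficulty=lambda s: len(s.split()))
```

Here `to_label` holds the indices that would be sent to annotators first, and `ordered` is the sequence in which training examples would be presented. Real systems typically use richer difficulty signals, such as model loss or annotator disagreement.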

B. Improving Quality and Diversity of Data for LLMs

For LLMs to truly excel, their training data must be of high quality and diverse in topics, language styles, and perspectives. Enhanced data labeling techniques are vital to ensure that the information is accurate, comprehensive, and as free from bias as possible. Additionally, active learning methodologies can be applied to data labeling, allowing annotators to focus on the data points most beneficial for the model’s performance. This approach can significantly improve the quality and diversity of the training dataset. Continuous evaluation and feedback are also essential for maintaining dataset quality and confirming that the model performs as intended (Vaswani et al., 2017).

C. The Role of Human-in-the-Loop in Training LLMs

Incorporating human expertise into the training loop is essential for refining LLMs and enhancing their performance. Human annotators bring valuable insights and knowledge that can greatly improve the model’s understanding of context, semantics, and nuances within the language. This human-in-the-loop approach ensures that the model’s outputs are accurate, relevant, and culturally appropriate (Brown et al., 2020).

D. Optimizing Training Efficiency and Performance of LLMs

Optimizing the training process of LLMs is vital for efficiency and performance. Active learning strategies, such as uncertainty sampling, query by committee, and human-in-the-loop review combined with active learning, can reduce the amount of labeled data needed to reach a given level of performance. These methods prioritize the most informative and challenging data points, allowing the model to learn more effectively and efficiently (Bengio et al., 2009).
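Query by committee can be sketched as follows. This is a minimal illustration that assumes a small committee of models has already produced a hard label for each unlabeled example; disagreement is measured with vote entropy, one common choice among several. The function names and the sample votes are hypothetical.

```python
import math
from collections import Counter


def vote_entropy(votes):
    """Disagreement among committee members for one example (0 = unanimous)."""
    counts = Counter(votes)
    total = len(votes)
    return -sum((c / total) * math.log(c / total) for c in counts.values())


def query_by_committee(committee_votes, budget):
    """Return indices of the `budget` examples the committee disagrees on most."""
    scores = [vote_entropy(votes) for votes in committee_votes]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:budget]


# Hypothetical labels from a three-model committee for three unlabeled texts.
committee_votes = [
    ["pos", "pos", "pos"],  # unanimous: little to learn from labeling this
    ["pos", "neg", "neu"],  # full disagreement: most informative
    ["neg", "neg", "pos"],  # partial disagreement
]
queried = query_by_committee(committee_votes, budget=2)
```

The indices in `queried` identify the examples that would be routed to human annotators, which is where the human-in-the-loop step connects to active learning.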

Final Thoughts

In navigating the expansive landscape of LLMs, the intricate interplay between data, training methodologies, and ethical considerations emerges as a foundational element for success. The multifaceted aspects of LLM development, from diverse data labeling and training strategies to the ethical considerations of responsible deployment, are crucial to harnessing the full potential of these advanced AI systems.

In this new era of natural language processing, the collaboration between machine intelligence and human expertise is a linchpin for success. The significance of diverse and representative data, underscored by continuous evaluation and human-in-the-loop methodologies, is paramount. Such human-guided processes are pivotal in shaping LLMs into sophisticated entities capable of navigating the complexities of language and cultural nuances.

The exploration of ethical considerations highlights the importance of transparent data labeling practices and a commitment to addressing biases and privacy concerns. This approach lays the groundwork for a more trustworthy and accountable AI ecosystem.

The adoption of advanced data labeling techniques, including those offered by SmartOne.ai, is instrumental in realizing the full potential of LLMs. SmartOne.ai’s human-in-the-loop services, such as data annotation, labeling, and tagging, provide the human insight and expert curation that AI teams need to optimize their models.

By leveraging the expertise and experience of SmartOne.ai, organizations can navigate the complexities of LLM development, ensuring that their models are robust, accurate, and ethically sound. This collaboration between human expertise and AI innovation is not just beneficial; it is essential for the continued advancement and success of LLMs in today’s dynamic landscape of natural language processing and artificial intelligence.

Contact Us

Struggling to deliver quality LLM data labeling on time and within budget? SmartOne offers the solution. Our experienced team, proven process, and purpose-built platform help companies maximize value from LLMs while minimizing costs and risks.

Whether starting or accelerating ML projects, we help you realize the full benefits of LLMs efficiently. Choose the global data labeling experts dedicated to quality, speed, and affordability. Choose SmartOne.

References

Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, 1, 4171–4186.

Brown, T. B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., … & Amodei, D. (2020). Language models are few-shot learners. arXiv preprint arXiv:2005.14165.

Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 610–623.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., … & Polosukhin, I. (2017). Attention is all you need. Advances in neural information processing systems, 30.

Bengio, Y., Louradour, J., Collobert, R., & Weston, J. (2009). Curriculum learning. In Proceedings of the 26th Annual International Conference on Machine Learning, 41–48.

Hochreiter, S., & Schmidhuber, J. (1997). Long short-term memory. Neural computation, 9(8), 1735–1780.

Wang, Y., Sun, Y., Liu, Z., Sarma, S. E., Bronstein, M. M., & Solomon, J. M. (2019). Dynamic graph CNN for learning on point clouds. ACM Transactions on Graphics (TOG), 38(5), 1–12.

Esteva, A., et al. (2019). A guide to deep learning in healthcare. Nature Medicine, 25(1), 24–29.

Arner, D. W., et al. (2017). FinTech and RegTech in a Nutshell, and the Future in a Sandbox. CFA Institute Research Foundation.

Azuma, R. T. (1997). A survey of augmented reality. Presence: Teleoperators & Virtual Environments, 6(4), 355–385.

Glossary of Terms

Large Language Models (LLMs): Advanced AI models specialized in processing, understanding, and generating human language based on large datasets. Examples include GPT-3 and BERT.

Data Labeling: The process of identifying and tagging raw data (like text or images) with relevant labels to help AI models understand and learn from this data.

Annotations: Notes or labels added to data, providing context, categorization, or explanations, crucial for training AI models, especially in natural language processing.

Synthetic Data: Artificially generated data that mimics real-world data, used to train AI models where real data is scarce, sensitive, or requires augmentation.

Human-in-the-Loop (HITL): A model of workflow where human judgment is integrated with AI operations, ensuring quality control and contextual understanding in AI applications.

Active Learning: A training approach in which the model selects the unlabeled data points expected to be most informative and requests labels for them from a human annotator.

Federated Learning: A machine learning technique where the model is trained across multiple decentralized devices or servers. Raw data stays local and only model updates are shared, which enhances privacy and data security.

Bias Mitigation: Techniques and practices aimed at reducing biases in AI models, ensuring fairness and ethical integrity.

Multimodal Data: Data that comes in various forms or modalities, such as text, images, audio, etc., used in AI to provide a richer learning context.

Transfer Learning: A machine learning method where a model developed for one task is reused as the starting point for a model on a second task.

Differential Privacy: A system for publicly sharing information about a dataset by describing patterns of groups within the dataset while withholding information about individuals in the dataset.

Natural Language Processing (NLP): A branch of AI that focuses on enabling computers to understand, interpret, and respond to human language in a useful way.

Curriculum Learning: A type of learning in which AI models are gradually exposed to increasing levels of difficulty in training data, enhancing learning efficiency.

Explainability: The extent to which the internal mechanisms of a machine or deep learning system can be explained in human terms.

Augmented Reality (AR): An interactive experience where real-world environments are enhanced with computer-generated perceptual information.

Graph Neural Networks (GNNs): A type of neural network designed to capture dependencies in graph-structured data. They are used for tasks that involve relational information, such as social networks or molecule structures.

Transformer Architecture: A neural network architecture based on self-attention mechanisms. It has been highly influential in NLP for tasks like translation, text summarization, and question-answering.

Reinforcement Learning (RL): An area of machine learning concerned with how agents should take actions in an environment to maximize cumulative reward.

Generative Adversarial Networks (GANs): A class of machine learning frameworks in which two neural networks, a generator and a discriminator, compete with each other. They are typically used in unsupervised learning and generative modeling.

Unsupervised Learning: A type of machine learning that looks for previously undetected patterns in a dataset without pre-existing labels.

Supervised Learning: A type of machine learning where the model is provided with labeled training data, and the goal is to learn a mapping from inputs to outputs.

Semi-Supervised Learning: A class of techniques that make use of both labeled and unlabeled data for training. These methods can significantly improve learning accuracy with limited labeled data.

Fine-Tuning: The process of continuing to train a pre-trained model on a smaller, task-specific dataset to adapt it to a particular task or domain, commonly used in transfer learning scenarios.

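Hyperparameter Tuning: The process of selecting good values for a learning algorithm’s hyperparameters, meaning settings such as learning rate or batch size that are fixed before training rather than learned from data. It is often crucial for enhancing the performance of machine learning models.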

Natural Language Understanding (NLU): A subfield of NLP focused on machine reading comprehension, concerned with the ability of a program to understand human language in the form of sentences or conversational contexts.

Model Interpretability: The extent to which a human can understand the cause of a decision made by a machine learning model. It’s crucial for diagnosing model behavior and ensuring fairness and transparency.

Privacy-Preserving Machine Learning: Techniques in machine learning that protect the privacy of individuals’ data while allowing models to learn from the data. Methods include differential privacy, federated learning, and homomorphic encryption.

Stochastic Gradient Descent (SGD): A widely used optimization method in machine learning and deep learning that updates model parameters using gradients computed on small random subsets of the training data, which makes it practical for large datasets.

Attention Mechanisms: Components in neural networks that assign different weights to different parts of the input, improving the model’s focus on important elements. They are key in Transformer models.

Tokenization: The process of converting text into smaller units (tokens), typically words or subwords, which can be further processed or understood by algorithms.

Recent articles

Generative AI summit 2024

Generative AI summit 2024

Client Case Study: Automated Accounting for Intelligent Processing

Client Case Study: Automated Accounting for Intelligent Processing

Client Case Study: AI Logistics Control

Client Case Study: AI Logistics Control

Client Case Study: Inquiry Filter for a CRM Platform

Client Case Study: Inquiry Filter for a CRM Platform

Client Case Study: Product Classification

Client Case Study: Product Classification

Client Case Study: Interius Farms Revolutionizing Vertical Farming with AI and Robotics

Client Case Study: Interius Farms Revolutionizing Vertical Farming with AI and Robotics

Client Case Study: Virtual Apparels Try-On

Client Case Study: Virtual Apparels Try-On

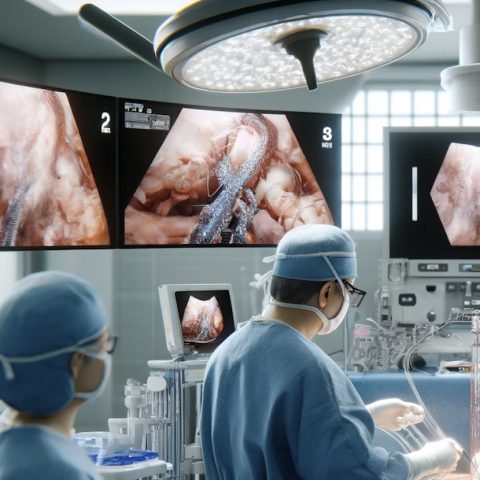

Client Case Study: SURGAR Delivering Augmented Reality for Laparoscopic Surgery

Client Case Study: SURGAR Delivering Augmented Reality for Laparoscopic Surgery

Client Case Study: Drone Intelligent Management

Client Case Study: Drone Intelligent Management

Client Case Study: Query-item matching for database management

Client Case Study: Query-item matching for database management