In 1960, Theodore Maiman at Hughes Research Laboratories built the world’s first laser, a ruby laser emitting red light at 694.3 nm. As laser technology progressed, so did LiDAR (Light Detection and Ranging), which uses lasers for detection and ranging.

Initially, LiDAR was primarily used in scientific research, such as meteorological detection and topographic mapping of oceans and forests.

In the 1980s, the introduction of scanning mechanisms expanded LiDAR’s field of view. This paved the way for commercial applications such as autonomous driving, environmental perception, and 3D modeling, where LiDAR has become a core technology.

What is LiDAR?

Definition and Characteristics of LiDAR

LiDAR (Light Detection And Ranging) is an active detection system that uses lasers as its emission source. It measures the position, velocity, and shape of targets by emitting laser beams and receiving the signals reflected back from the targets.

Compared to other sensors like cameras, LiDAR has the following characteristics:

- High accuracy and resolution: LiDAR provides high-precision distance and angle measurements, generating high-resolution point clouds.

- Strong adaptability: Because LiDAR supplies its own illumination, it works day and night without depending on ambient lighting. It also tolerates light rain and fog, although heavy precipitation or dense fog attenuates the laser and shortens its effective range. Depending on the design and function, LiDAR can be used in a wide range of applications, from atmospheric detection to autonomous driving.

- Integration and reliability: Solid-state LiDAR has few or no moving parts, which reduces mechanical wear, improves reliability, and makes it easier to integrate into systems.

- Cost and technological progress: LiDAR costs are relatively high, and the level of technological maturity varies, ranging from expensive multi-line mechanical systems to more economical solid-state solutions.

Importance of LiDAR Data in Various Industries

With its high resolution, real-time performance, and accuracy, LiDAR data has become a key element in driving modern technological progress and the intelligent transformation of industries.

In the automotive domain, LiDAR provides high-precision 3D mapping of the environment, enabling vehicles to perceive surrounding obstacles in real time and navigate safely.

Similar to self-driving technology, LiDAR equips robots with precise obstacle avoidance and positioning capabilities in the robotics field. Both indoor service robots and material handling robots in industrial automation rely on LiDAR for efficient operation.

Even traditional industries like agriculture are undergoing intelligent transformation. In precision agriculture, LiDAR data is used for crop height monitoring, terrain analysis, and optimizing seeding and fertilization paths to improve production efficiency.

LiDAR Point Cloud Data Acquisition and Formats

LiDAR data is typically represented as a 3D point cloud: a collection of many discrete 3D points, each with coordinates (X, Y, Z) and optional additional attributes such as reflection intensity and color.

Taking Time-of-Flight (ToF) technology as an example, the LiDAR system first emits laser pulses toward the target. The pulses are reflected when they hit an object, and the receiver captures the returning light and records the round-trip time Δt. Because the pulse travels to the target and back, the distance is d = c × Δt / 2, where c is the speed of light. Combining this distance with the scanning angles at which the pulse was fired gives the 3D coordinates of each point.
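The range calculation and the conversion from scan angles to coordinates can be sketched in a few lines of Python. This is a minimal sketch for an idealized sensor: the angle convention (azimuth measured from +X in the horizontal plane, elevation measured upward from it) is an assumption, since manufacturers differ, and real devices also apply calibration offsets that are ignored here. The function names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact by SI definition)


def tof_range(round_trip_time_s: float) -> float:
    """Distance to the target from the measured round-trip time.

    The pulse travels out and back, so the one-way distance is half of
    (speed of light * round-trip time).
    """
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def to_cartesian(r: float, azimuth_rad: float, elevation_rad: float):
    """Convert a range plus scan angles into sensor-frame X, Y, Z.

    Assumed convention: azimuth from +X in the X-Y plane, elevation up from that plane.
    """
    horizontal = r * math.cos(elevation_rad)  # projection onto the X-Y plane
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = r * math.sin(elevation_rad)
    return x, y, z


# A return arriving 200 ns after emission corresponds to a target about 30 m away.
r = tof_range(200e-9)
point = to_cartesian(r, math.radians(30.0), math.radians(-2.0))
print(f"range = {r:.3f} m, point = {tuple(round(c, 3) for c in point)}")
```

Note how small the timing budget is: a 1 ns error in Δt already shifts the range by about 15 cm, which is why ToF receivers need very precise timing electronics.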

Common point cloud data formats include:

- PCD (Point Cloud Data): The native format of PCL (Point Cloud Library), common in computer vision and robotics. It is flexible: a header declares the per-point fields, and the point data can be stored as ASCII or binary.

- LAS/LAZ: Developed by the ASPRS (American Society for Photogrammetry and Remote Sensing), a standard storage format for point cloud data, widely used in Geographic Information Systems (GIS). LAZ is a compressed version of LAS, saving storage space.

- PTS/PTX: Simple formats for storing point cloud data, usually containing XYZ coordinates and sometimes RGB color information.

- ASC/XYZ: Plain-text formats, simple and straightforward, with one point per line listing its X, Y, and Z coordinates, sometimes followed by additional values such as intensity or color.
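To make the difference between the text formats concrete, the following sketch reads an XYZ-style text block and writes it out as an ASCII PCD file with the standard v0.7 header. It is a simplified illustration: the helper names are hypothetical, extra columns such as intensity are dropped, and binary PCD or LAS/LAZ files would normally be handled by a dedicated library (for example PCL, Open3D, or laspy) rather than by hand.

```python
def read_xyz(text: str):
    """Parse an ASC/XYZ-style text block: one point per line, X Y Z first.

    Extra columns (intensity, colour, ...) are ignored in this sketch;
    blank lines and lines starting with '#' are skipped.
    """
    points = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.replace(",", " ").split()  # accept space- or comma-separated values
        x, y, z = (float(v) for v in fields[:3])
        points.append((x, y, z))
    return points


def to_ascii_pcd(points) -> str:
    """Serialise XYZ points as an ASCII PCD v0.7 file (unorganised cloud, HEIGHT 1)."""
    n = len(points)
    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z",
        "SIZE 4 4 4",          # 4-byte floats per field
        "TYPE F F F",
        "COUNT 1 1 1",
        f"WIDTH {n}",
        "HEIGHT 1",
        "VIEWPOINT 0 0 0 1 0 0 0",
        f"POINTS {n}",
        "DATA ascii",
    ]
    body = [f"{x} {y} {z}" for x, y, z in points]
    return "\n".join(header + body) + "\n"


xyz_text = """# x y z intensity
1.0 2.0 0.5 120
1.1 2.0 0.4 98
"""
pcd_text = to_ascii_pcd(read_xyz(xyz_text))
print(pcd_text)
```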

Essential Point Cloud Algorithms

Efficiently and accurately processing point cloud data is key to implementing applications like autonomous driving and robot navigation.

This section introduces the basic algorithms for point cloud processing, from pre-processing to downstream tasks such as classification, detection, and segmentation.

Point Cloud Pre-processing

Pre-processing point cloud data is a critical step that directly affects the accuracy and efficiency of subsequent analysis and applications. The goal is to remove noise, simplify data structures, and adjust the position and orientation of point clouds for better results.

The main pre-processing techniques include:

Point Cloud Denoising

Denoising removes noisy points caused by measurement errors, environmental interference, or hardware limitations.

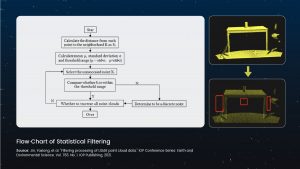

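Statistical filtering is a common method that identifies and removes outliers by analyzing the local density or distance distribution of points. It assumes that noisy points lie far from their neighbors, while real points lie close to theirs. A typical implementation, such as remove_statistical_outlier in Open3D, computes each point's mean distance to its k nearest neighbors and discards points whose mean distance is much larger than the average over the whole cloud.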
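A minimal pure-Python sketch of statistical outlier removal follows. The parameters `k` and `std_ratio` loosely mirror Open3D's `nb_neighbors` and `std_ratio`, but the function itself is a hypothetical illustration: it uses a brute-force neighbor search, whereas real implementations use a KD-tree.

```python
import math
import statistics


def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Drop points whose mean distance to their k nearest neighbours is unusually large.

    A point is kept if its mean k-NN distance is at most
    global_mean + std_ratio * global_std (statistics over all points).
    """
    if len(points) <= 1:
        return list(points)
    mean_dists = []
    for i, p in enumerate(points):
        # Brute-force neighbour search: O(n^2), acceptable only for a demo.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        nearest = dists[:k]
        mean_dists.append(sum(nearest) / len(nearest))
    mu = statistics.fmean(mean_dists)
    sigma = statistics.pstdev(mean_dists)
    threshold = mu + std_ratio * sigma
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


# A flat 5 x 5 grid (10 cm spacing) plus one stray return far above it.
plane = [(0.1 * i, 0.1 * j, 0.0) for i in range(5) for j in range(5)]
noisy = plane + [(5.0, 5.0, 5.0)]
clean = remove_statistical_outliers(noisy, k=8, std_ratio=2.0)
print(len(noisy), "->", len(clean))  # 26 -> 25
```

Lowering `std_ratio` makes the filter more aggressive; too low a value starts removing legitimate points on sparse surfaces.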

Radius filtering, such as remove_radius_outlier in Open3D, determines and removes noisy points based on the number of points within a fixed radius of each point, assuming that real points have more neighbors than noisy points.
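Radius filtering can be sketched the same way. The parameters `radius` and `min_neighbors` play roles similar to Open3D's `radius` and `nb_points`, but this hypothetical helper counts only *other* points inside the sphere and uses a brute-force search, so its exact thresholds should not be assumed to match Open3D's.

```python
import math


def remove_radius_outliers(points, radius=0.15, min_neighbors=3):
    """Keep a point only if at least `min_neighbors` other points lie within `radius`."""
    kept = []
    for i, p in enumerate(points):
        count = sum(
            1 for j, q in enumerate(points) if j != i and math.dist(p, q) <= radius
        )
        if count >= min_neighbors:
            kept.append(p)
    return kept


# Same toy scene as before: a 5 x 5 grid with 10 cm spacing plus one isolated point.
plane = [(0.1 * i, 0.1 * j, 0.0) for i in range(5) for j in range(5)]
noisy = plane + [(1.0, 1.0, 1.0)]
clean = remove_radius_outliers(noisy, radius=0.15, min_neighbors=3)
print(len(noisy), "->", len(clean))  # 26 -> 25
```

The radius should be chosen relative to the expected point spacing: here a grid corner has exactly three neighbors within 0.15 m, so a smaller radius or a larger `min_neighbors` would start eroding the edges of the real surface.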

Point Cloud Down-Sampling

Down-sampling reduces the amount of point cloud data and improves processing speed while preserving the geometric features of the point cloud.

Voxel down-sampling is a common technique that divides the space into voxels (3D pixels) and merges the points within each voxel into a representative point, usually the center point or centroid. The voxel_down_sample function in Open3D uses this principle, reducing the number of points and simplifying the data structure based on the voxel size.
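The voxel idea fits in a few lines: quantize each coordinate by the voxel size, group points by the resulting integer key, and replace each group by its centroid. This is a hypothetical stand-alone sketch that loosely mirrors what Open3D's voxel_down_sample does; it is not that library's implementation.

```python
import math
from collections import defaultdict


def voxel_downsample_centroid(points, voxel_size):
    """Replace all points that fall in the same voxel by their centroid."""
    buckets = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis.
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    return [
        tuple(sum(coords) / len(members) for coords in zip(*members))
        for members in buckets.values()
    ]


cloud = [(0.01, 0.02, 0.0), (0.03, 0.04, 0.0), (0.52, 0.0, 0.0), (0.55, 0.02, 0.0)]
print(voxel_downsample_centroid(cloud, voxel_size=0.1))
```

Using the centroid rather than the voxel center keeps each output point on or near the measured surface, at the cost of an irregular output spacing.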

Other simple and efficient methods include uniform and random down-sampling.
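Uniform and random down-sampling are even simpler, since they ignore geometry entirely. The sketch below uses hypothetical helper names whose parameters are loosely modelled on Open3D's `every_k_points` and `sampling_ratio`; uniform sampling keeps every k-th point in storage order, and random sampling draws a subset without replacement.

```python
import random


def uniform_down_sample(points, every_k_points):
    """Keep every k-th point in storage order (indices 0, k, 2k, ...)."""
    return points[::every_k_points]


def random_down_sample(points, sampling_ratio, seed=None):
    """Keep round(ratio * n) points chosen at random, without replacement."""
    rng = random.Random(seed)  # seeded generator for reproducible subsets
    n_keep = round(sampling_ratio * len(points))
    return rng.sample(points, n_keep)


cloud = [(float(i), 0.0, 0.0) for i in range(10)]
print(uniform_down_sample(cloud, 3))  # [(0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (6.0, 0.0, 0.0), (9.0, 0.0, 0.0)]
print(len(random_down_sample(cloud, 0.5, seed=42)))  # 5
```

Because neither method looks at point positions, dense regions stay denser than sparse ones after sampling, which is the main reason voxel down-sampling is often preferred.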

Coordinate Transformation

Coordinate transformation adjusts the position and orientation of point clouds, including translation and rotation.

Common coordinate systems include the world, sensor, and object coordinate systems. The relative pose between two coordinate systems is described by a rotation and a translation vector. The rotation can be parameterized by Euler angles or quaternions and is applied to points as a 3×3 rotation matrix.

Combining the rotation matrix R and the translation vector t gives a 4×4 homogeneous transformation matrix that maps each point p to p' = Rp + t. This is crucial for aligning point clouds captured from different viewpoints and for matching or fusing multi-source data.
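The following sketch converts a unit quaternion to a rotation matrix, packs it with a translation into a 4×4 homogeneous matrix, and applies it to points. The mounting pose in the example (sensor yawed 90° and 1.5 m above the world origin) is purely hypothetical, and a production pipeline would normally use a linear-algebra library instead of nested lists.

```python
import math


def quaternion_to_rotation(w, x, y, z):
    """3x3 rotation matrix from a unit quaternion (w, x, y, z)."""
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def make_transform(R, t):
    """Pack rotation R (3x3) and translation t (length 3) into a 4x4 homogeneous matrix."""
    return [R[0] + [t[0]], R[1] + [t[1]], R[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def transform_points(T, points):
    """Apply p' = R p + t to every point using homogeneous coordinates (x, y, z, 1)."""
    out = []
    for px, py, pz in points:
        v = (px, py, pz, 1.0)
        out.append(tuple(sum(T[r][c] * v[c] for c in range(4)) for r in range(3)))
    return out


# Hypothetical pose: rotate 90 degrees about Z, then lift 1.5 m along Z.
half = math.radians(90) / 2
R = quaternion_to_rotation(math.cos(half), 0.0, 0.0, math.sin(half))
T = make_transform(R, [0.0, 0.0, 1.5])
print(transform_points(T, [(1.0, 0.0, 0.0)]))  # approximately (0, 1, 1.5)
```

Keeping the pose as a single 4×4 matrix has a practical benefit: chaining frames (object to sensor to vehicle to world) becomes a sequence of matrix multiplications.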

Point Cloud Classification and Detection

After pre-processing, point cloud data can be used to build training data for various high-level applications, such as classification and object detection, which identify different objects or features in the point cloud.

Supervised Learning Methods

Classical supervised approaches to point cloud classification combine feature engineering with traditional machine learning algorithms.

Features such as surface normals, local density, and distance statistics are extracted from the point cloud and used as input for training and classification through algorithms like Support Vector Machines (SVM), Random Forests, and K-Nearest Neighbors (KNN).

This method relies on manually designed features, and its performance is limited by the effectiveness and generality of the features.

For example, 3D descriptors such as Fast Point Feature Histograms (FPFH), or image descriptors such as Histogram of Oriented Gradients (HOG) computed on 2D projections of the point cloud, can be used as features and fed to a classifier to distinguish different point cloud categories.
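The feature-plus-classifier pattern can be illustrated with a deliberately tiny example: two hand-crafted features per segmented object (bounding-box height and footprint diagonal) and a K-Nearest Neighbors vote. The feature choice, helper names, and training set are all hypothetical toy values, not real measurements, and stand in for richer descriptors such as FPFH.

```python
import math
from collections import Counter


def extract_features(cluster):
    """Hypothetical hand-crafted features for one segmented object.

    Returns (height, footprint diagonal), both in metres. Real pipelines use
    richer descriptors (normals, FPFH, ...) and standardise feature scales.
    """
    xs, ys, zs = zip(*cluster)
    height = max(zs) - min(zs)
    footprint = math.hypot(max(xs) - min(xs), max(ys) - min(ys))
    return (height, footprint)


def knn_predict(train_features, train_labels, query, k=3):
    """Label `query` by majority vote among its k nearest training samples."""
    ranked = sorted(
        zip(train_features, train_labels),
        key=lambda pair: math.dist(pair[0], query),
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Toy, hand-made training set: (height, footprint) per object.
train_X = [(1.7, 0.6), (1.8, 0.5), (1.6, 0.7), (1.5, 4.5), (1.4, 4.8), (1.6, 4.2)]
train_y = ["pedestrian"] * 3 + ["car"] * 3

query_cluster = [(0.0, 0.0, 0.0), (0.4, 0.3, 1.75), (0.2, 0.1, 0.9)]
print(knn_predict(train_X, train_y, extract_features(query_cluster)))  # pedestrian
```

The example also shows the limitation mentioned above: height alone cannot separate these classes, so the result depends entirely on whether the designer picked a discriminative second feature.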

Deep Learning Methods

Deep learning has made significant progress in point cloud classification and detection. Point cloud-specific networks such as PointNet, PointNet++, and Dynamic Graph CNN (DGCNN) process raw points directly without manual feature engineering, while Convolutional Neural Networks (CNNs) are typically applied after the points have been voxelized or projected into 2D views.
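A key idea that lets PointNet consume raw, unordered points is to apply the same small network to every point and then aggregate with a symmetric function (max pooling), so the result does not depend on point order. The sketch below shows only that idea, with random untrained weights and hypothetical helper names; it omits PointNet's alignment networks, training, and classification head.

```python
import random


def shared_mlp(point, weights, biases):
    """One shared layer: the SAME weights are applied to every point (ReLU activation)."""
    return [
        max(0.0, sum(w * c for w, c in zip(row, point)) + b)
        for row, b in zip(weights, biases)
    ]


def global_feature(points, weights, biases):
    """PointNet-style global descriptor: per-point features, then a max over points.

    Max is symmetric, so the descriptor is invariant to permutations of the input.
    """
    per_point = [shared_mlp(p, weights, biases) for p in points]
    return [max(channel) for channel in zip(*per_point)]


rng = random.Random(0)
W = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(8)]  # 3 -> 8 channels, untrained
b = [0.0] * 8
cloud = [(rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(50)]
shuffled = cloud[:]
rng.shuffle(shuffled)
print(global_feature(cloud, W, b) == global_feature(shuffled, W, b))  # True
```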

For point cloud detection, methods such as Multi-View PointNet (MVP) and VoteNet locate and identify objects through multi-view fusion or by voting directly on point clouds, which is particularly important in autonomous driving and industrial inspection.

Deep learning methods can automatically learn features and handle complex and varying point cloud structures but require a large amount of annotated data for training.

Point Cloud Segmentation

Point cloud segmentation divides point cloud data into subsets with the same attributes or features, helping to understand scene structure and extract specific objects.

Region Growing

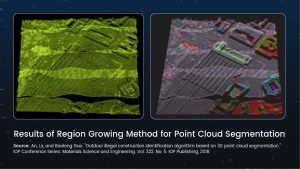

Region growing is a segmentation method based on adjacency relationships.

It starts from seed points and gradually expands to neighboring points that meet specific similarity criteria, such as distance or feature difference. When a candidate neighbor is sufficiently similar to the current region, it is merged into that region and becomes a starting point for further growth.

This method is simple and intuitive, but its results depend heavily on the choice of growth criteria and initial seed points, and it is sensitive to noise.
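Region growing can be sketched as a breadth-first search. In this hypothetical example each point carries one scalar feature (say, the angle of its surface normal to vertical, in degrees), and a neighbor within `radius` joins the region if its feature differs from the current point's by at most `max_feature_diff`. Seeds are taken in index order for simplicity; practical implementations often pick seeds by a quality measure such as low curvature.

```python
import math
from collections import deque


def region_growing(points, features, radius, max_feature_diff):
    """Return one region label per point by growing regions from seeds."""
    labels = [-1] * len(points)  # -1 = not yet assigned
    region = 0
    for seed in range(len(points)):
        if labels[seed] != -1:
            continue
        labels[seed] = region
        queue = deque([seed])
        while queue:
            i = queue.popleft()
            for j in range(len(points)):
                if labels[j] != -1:
                    continue
                close = math.dist(points[i], points[j]) <= radius
                similar = abs(features[i] - features[j]) <= max_feature_diff
                if close and similar:
                    labels[j] = region  # merge and grow further from j
                    queue.append(j)
        region += 1
    return labels


# Toy scene: a strip of ground (normal angle 0 deg) meeting a wall (normal angle 90 deg).
pts = [(0.0, 0, 0), (0.1, 0, 0), (0.2, 0, 0), (0.3, 0, 0),
       (0.4, 0, 0.1), (0.4, 0, 0.2), (0.4, 0, 0.3)]
normal_angles = [0, 0, 0, 0, 90, 90, 90]
print(region_growing(pts, normal_angles, radius=0.15, max_feature_diff=10))  # [0, 0, 0, 0, 1, 1, 1]
```

The ground point at x = 0.3 is spatially close to the first wall point, so distance alone would merge the two surfaces; the feature criterion is what keeps them apart.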

Clustering

Point cloud clustering groups point cloud data into clusters, with each cluster representing a possible object or feature region.

Common clustering algorithms include Density-Based Spatial Clustering of Applications with Noise (DBSCAN), Mean Shift, and spectral clustering.

DBSCAN is based on the density of points and can automatically discover clusters of arbitrary shapes, while Mean Shift finds high-density regions of data points through an iterative process.
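A compact DBSCAN sketch follows. It uses the common convention that a core point needs at least `min_points` points (itself included) within `eps`, and labels noise as -1, as Open3D's cluster_dbscan also does; the code itself is a hypothetical brute-force illustration, not Open3D's implementation.

```python
import math

NOISE = -1


def dbscan(points, eps, min_points):
    """Minimal DBSCAN: one label per point, -1 for noise."""

    def neighbours(i):
        return [j for j in range(len(points)) if math.dist(points[i], points[j]) <= eps]

    labels = [None] * len(points)  # None = not yet visited
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_points:
            labels[i] = NOISE  # may later be relabelled as a border point
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: reachable but not core, do not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbours = neighbours(j)
            if len(j_neighbours) >= min_points:  # only core points expand the cluster
                queue.extend(j_neighbours)
        cluster += 1
    return labels


blob_a = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0), (0.1, 0.1, 0.0)]
blob_b = [(5.0, 5.0, 0.0), (5.1, 5.0, 0.0), (5.0, 5.1, 0.0), (5.1, 5.1, 0.0)]
stray = [(10.0, 0.0, 0.0)]
print(dbscan(blob_a + blob_b + stray, eps=0.2, min_points=3))  # [0, 0, 0, 0, 1, 1, 1, 1, -1]
```

Unlike k-means, DBSCAN does not need the number of clusters in advance, which suits LiDAR scenes where the number of objects is unknown; the trade-off is that a single `eps` struggles when point density varies strongly with range.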

These clustering algorithms handle irregular point distributions well, and density-based methods such as DBSCAN explicitly label sparse points as noise, which makes clustering a very practical technique for point cloud segmentation.

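Like classification and detection, learning-based point cloud segmentation requires a large amount of annotated data for training and evaluation. Unsupervised methods such as region growing and clustering need no training labels, although annotated data is still useful for evaluating them.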